Network Connector

Configuring SearchBlox

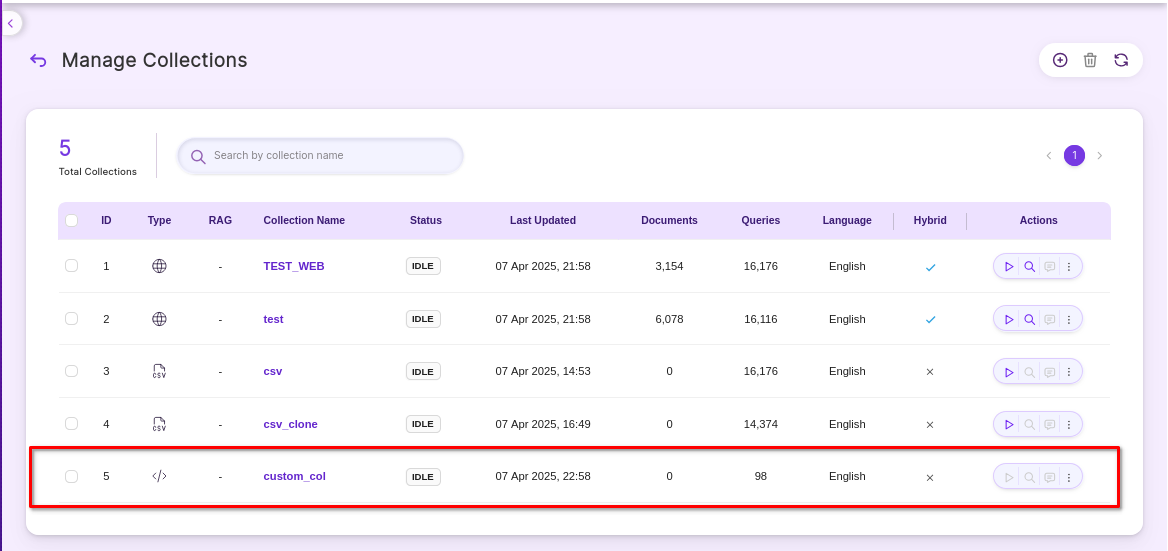

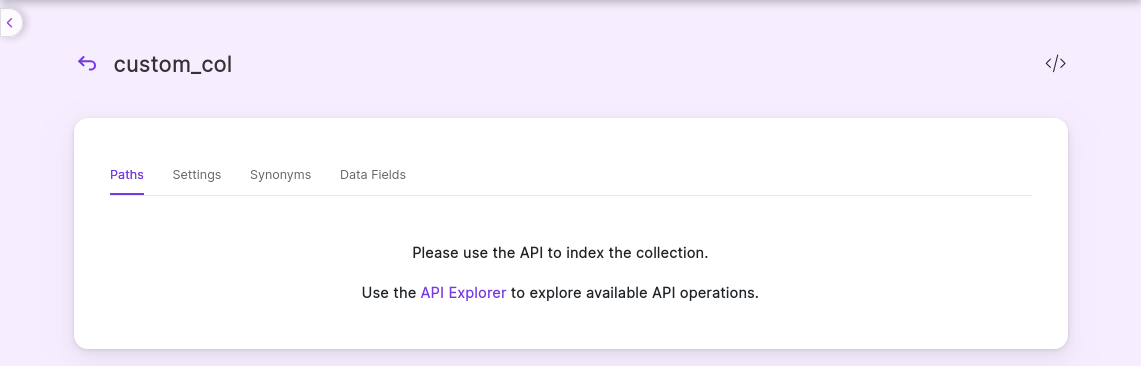

Create Custom Collection

Navigate to: Collections → Create New Collection

Select Custom Collection as the collection type.

Installing the Network Crawler

Contact [email protected] to get the download link for SearchBlox-network-crawler.

For Linux Systems:

# 1. Create installation directory

sudo mkdir -p /opt/searchblox-network

# 2. Download the latest network crawler package (using the link provided by SearchBlox) to /tmp/searchblox-network-crawler-latest.zip

# 3. Extract the package

sudo unzip /tmp/searchblox-network-crawler-latest.zip -d /opt/searchblox-network

# 4. Set permissions

sudo chown -R searchblox:searchblox /opt/searchblox-network

sudo chmod -R 755 /opt/searchblox-network/bin
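A quick post-install check can catch a bad extraction before you start editing configuration files. The sketch below is a minimal example, not part of the SearchBlox package: verify_install is a hypothetical helper, and it assumes the conf folder sits directly under /opt/searchblox-network and holds the three configuration files described in the next section.

```shell
# Hypothetical helper: confirm the crawler's configuration files exist
# under the install directory (default: /opt/searchblox-network).
verify_install() {
  dir="${1:-/opt/searchblox-network}"
  missing=0
  for f in config.yml searchblox.yml windowsshare.yml; do
    if [ ! -f "$dir/conf/$f" ]; then
      echo "missing: $dir/conf/$f" >&2
      missing=1
    fi
  done
  # Non-zero exit status if any file is missing.
  return "$missing"
}

# Example: verify_install /opt/searchblox-network && echo "install looks complete"
```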

For Windows Systems:

Create the folder C:\searchblox-network.

Download the Windows package.

Extract the ZIP contents to C:\searchblox-network.

Configuring SMB

The extracted folder contains a conf folder that holds all the configuration files the crawler needs.

Locating the Config File

Linux: /opt/searchblox-network/conf/config.yml

Windows: C:\searchblox-network\conf\config.yml

- config.yml

This file connects the network crawler to your SearchBlox instance and collection. Edit it in any text editor.

| Field | Description |
|---|---|
| apikey | The API Key of your SearchBlox instance. Found in the Admin tab. |
| colname | Name of the custom collection you created. |
| colid | The Collection ID of your collection. Found in the Collections tab near the collection name. |
| url | The URL of your SearchBlox instance. |
| sbpkey | The SB-PKEY of your SearchBlox instance. Found in the Users tab (Admin users only). Create an admin user if needed. |

apikey: DD7B0E5E6BB786F10D70A86399806591

colname: custom

colid: 2

url: https://localhost:8443/

sbpkey: MNiwiA0TNlIBG0jZpWVPNuszaT/jT39G03kpF01gUpjGQK8+ZSKtQMNVqKxxke/wEthSWw==
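Because the crawler needs all five fields, a missing value is an easy mistake to catch before starting it. In the following sketch, check_config is a hypothetical helper: it only checks that each key appears at the start of a line with a non-empty value, and it does not validate the values against your SearchBlox instance.

```shell
# Hypothetical helper: check that config.yml defines a non-empty value
# for each field listed in the table above.
check_config() {
  file="$1"
  status=0
  for key in apikey colname colid url sbpkey; do
    # Match "key: value" at the start of a line with something after the colon.
    if ! grep -Eq "^${key}:[[:space:]]*[^[:space:]]" "$file"; then
      echo "missing or empty: $key" >&2
      status=1
    fi
  done
  return "$status"
}

# Example: check_config /opt/searchblox-network/conf/config.yml
```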

- searchblox.yml

This file holds the OpenSearch connection settings used by the SearchBlox network crawler. Edit it in any text editor.

| Setting | Description |
|---|---|
| searchblox.elasticsearch.url | OpenSearch URL, including the port. Configure this when OpenSearch is reached by IP address or domain name. |
| searchblox.elasticsearch.host | Hostname of the OpenSearch server. |
| searchblox.elasticsearch.port | Port of the OpenSearch server. |
| searchblox.elasticsearch.basic.username | OpenSearch username. |
| searchblox.elasticsearch.basic.password | OpenSearch password. |
| es.home | Path to the opensearch folder of your SearchBlox installation, for example /opt/searchblox/opensearch on Linux or C:\SearchBloxServer\opensearch on Windows. |

searchblox.elasticsearch.host: localhost

searchblox.elasticsearch.port: 9200

searchblox.elasticsearch.basic.username: admin

searchblox.elasticsearch.basic.password: xxxxxxx

es.home: C:\SearchBloxServer\opensearch
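To confirm that the host, port, and credentials in searchblox.yml actually reach OpenSearch, you can query the cluster health endpoint. This is a minimal sketch under stated assumptions: opensearch_url and opensearch_ping are hypothetical helpers, and HTTPS with a self-signed certificate is assumed (hence curl -k), matching the https://localhost:9200 URLs used later on this page.

```shell
# Hypothetical helpers for checking the OpenSearch settings in searchblox.yml.
# Assumes HTTPS with a self-signed certificate, hence curl -k.

# Build the base URL from the host and port settings.
opensearch_url() {
  echo "https://$1:$2"
}

# Request cluster health with basic auth.
# curl prompts for the password when only a username is given.
# Usage: opensearch_ping HOST PORT USERNAME
opensearch_ping() {
  curl -k -u "$3" "$(opensearch_url "$1" "$2")/_cluster/health?pretty"
}

# Example (not run here): opensearch_ping localhost 9200 admin
```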

- windowsshare.yml

Enter the server (IP address or domain), authentication domain, username, password, shared folder path, disallowed paths, allowed file formats, and recrawl interval in windowsshare.yml (C:\searchblox-network\conf\windowsshare.yml on Windows, /opt/searchblox-network/conf/windowsshare.yml on Linux). You can list more than one server, or more than one path on the same server, in this file.

Each setting is described in the comments of the sample file:

# Recrawl interval in days.
recrawl: 1
servers:
  # IP address or domain name of the server.
  - server: 89.107.56.109
    # Authentication domain (optional).
    authentication-domain:
    # Administrator username.
    username: administrator
    # Administrator password.
    password: xxxxxxxx
    # Folder path(s) to index.
    shared-folder-path: [/test/jason/pencil/]
    # Paths inside the shared folder to exclude from indexing.
    disallow-path: [/admin/,/js/]
    # File formats to index.
    allowed-format: [ txt,doc,docx,xls,xlsx,xltm,ppt,pptx,html,htm,pdf,odt,ods,rtf,vsd,xlsm,mpp,pps,one,potx,pub,pptm,odp,dotx,csv,docm,pot ]
  # Details of another server, or another path on the same server.
  - server: 89.107.56.109
    authentication-domain:
    username: administrator
    password: xxxxxxxxxx
    shared-folder-path: [/test/jason/newone/]
    disallow-path: [/admin/,/js/]
    allowed-format: [ txt,doc,docx,xls,xlsx,xltm,ppt,pptx,html,htm,pdf,odt,ods,rtf,vsd,xlsm,mpp,pps,one,potx,pub,pptm,odp,dotx,csv,docm,pot ]
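Before starting the crawler, it can help to confirm from a Linux machine that the server, credentials, and folder in a windowsshare.yml entry are reachable. The sketch below uses the Samba smbclient tool, which is not part of the crawler. share_name, share_subpath, and smb_list are hypothetical helpers, and they assume the first segment of shared-folder-path is the SMB share name.

```shell
# Hypothetical helpers for testing a windowsshare.yml entry with smbclient.
# Assumption: the first segment of shared-folder-path is the SMB share name,
# so /test/jason/pencil/ means share "test", folder jason/pencil.

# Print the share name: drop the leading slash, keep text up to the next slash.
share_name() {
  p="${1#/}"
  echo "${p%%/*}"
}

# Print the folder inside the share, without leading or trailing slashes.
share_subpath() {
  p="${1#/}"
  case "$p" in
    */*) p="${p#*/}" ;;
    *) p="" ;;
  esac
  echo "${p%/}"
}

# List the folder; smbclient prompts for the password.
# Usage: smb_list SERVER SHARED_FOLDER_PATH USERNAME
smb_list() {
  share=$(share_name "$2")
  # Convert to backslash-separated SMB paths.
  dir=$(share_subpath "$2" | tr '/' '\\')
  if [ -n "$dir" ]; then
    smbclient "//$1/$share" -U "$3" -D "$dir" -c 'ls'
  else
    smbclient "//$1/$share" -U "$3" -c 'ls'
  fi
}

# Example (not run here): smb_list 89.107.56.109 /test/jason/pencil/ administrator
```

If authentication-domain is set, smbclient also accepts the username in the form DOMAIN\username (quote it in the shell).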

Starting the Crawler

To start the crawler:

On Linux, run start.sh.

On Windows, run start.bat.

The crawler runs in the background. Logs are available in the logs folder.
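Since the log file names are not documented here, a small helper that picks the most recently modified file in the logs folder can be handy for following the crawl. latest_log is a hypothetical helper, and it assumes the logs folder sits directly under the install directory.

```shell
# Hypothetical helper: print the newest file in the crawler's logs folder.
# Assumes logs/ sits directly under the install directory.
latest_log() {
  dir="${1:-/opt/searchblox-network}/logs"
  # ls -t sorts by modification time, newest first; -1 prints one per line.
  name=$(ls -1t "$dir" 2>/dev/null | head -n 1)
  if [ -z "$name" ]; then
    echo "no logs found in $dir" >&2
    return 1
  fi
  echo "$dir/$name"
}

# Example: tail -f "$(latest_log /opt/searchblox-network)"
```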

Note

Single Instance Limitation: Only one network crawler can run at a time. To crawl different paths or servers, update the configuration in windowsshare.yml.

Re-running for a New Collection: Before re-running the crawler for a different collection, delete the sb_network index using an Elasticsearch-compatible tool.

Stopping the Crawler: The network connector must be stopped manually when it is no longer needed.

Plain-Text Passwords: If your SMB server requires plain-text password authentication, allow it by adding the following parameter to start.bat:

-Djcifs.smb.client.disablePlainTextPasswords=false

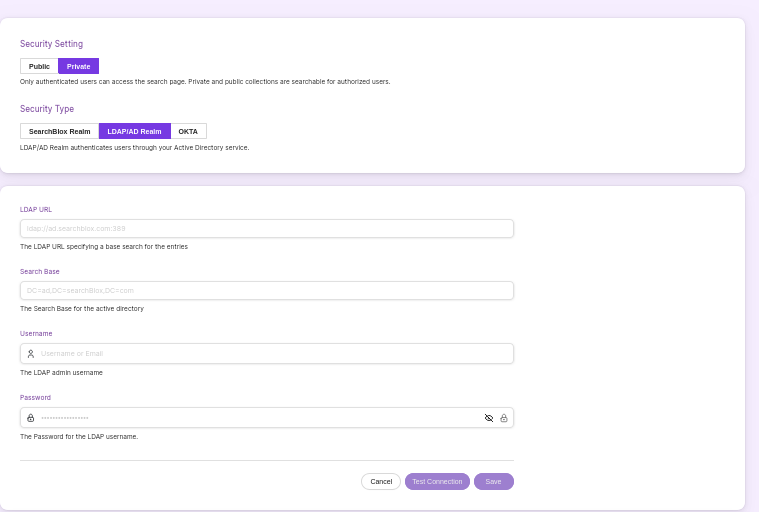

Searching Securely Using SearchBlox

To enable Secure Search using Active Directory:

- Navigate to Search → Security Settings.

- Check the Enable Secured Search option.

- Enter the required LDAP configuration details.

- Test the connection to verify settings.

Once enabled, Secure Search will function based on your Active Directory configuration.

- Enter the Active Directory details

| Label | Description |
|---|---|
| LDAP URL | LDAP URL that specifies the base search for the entries |
| Search Base | Search base for the Active Directory |
| Username | Admin username |
| Password | Password for the username |
| Filter-Type | Filter type: default or document |
| Enable document filter | Enable this option to filter search results based on the user |

- Once you set up security groups, log in with your AD credentials at:

https://localhost:8443/search

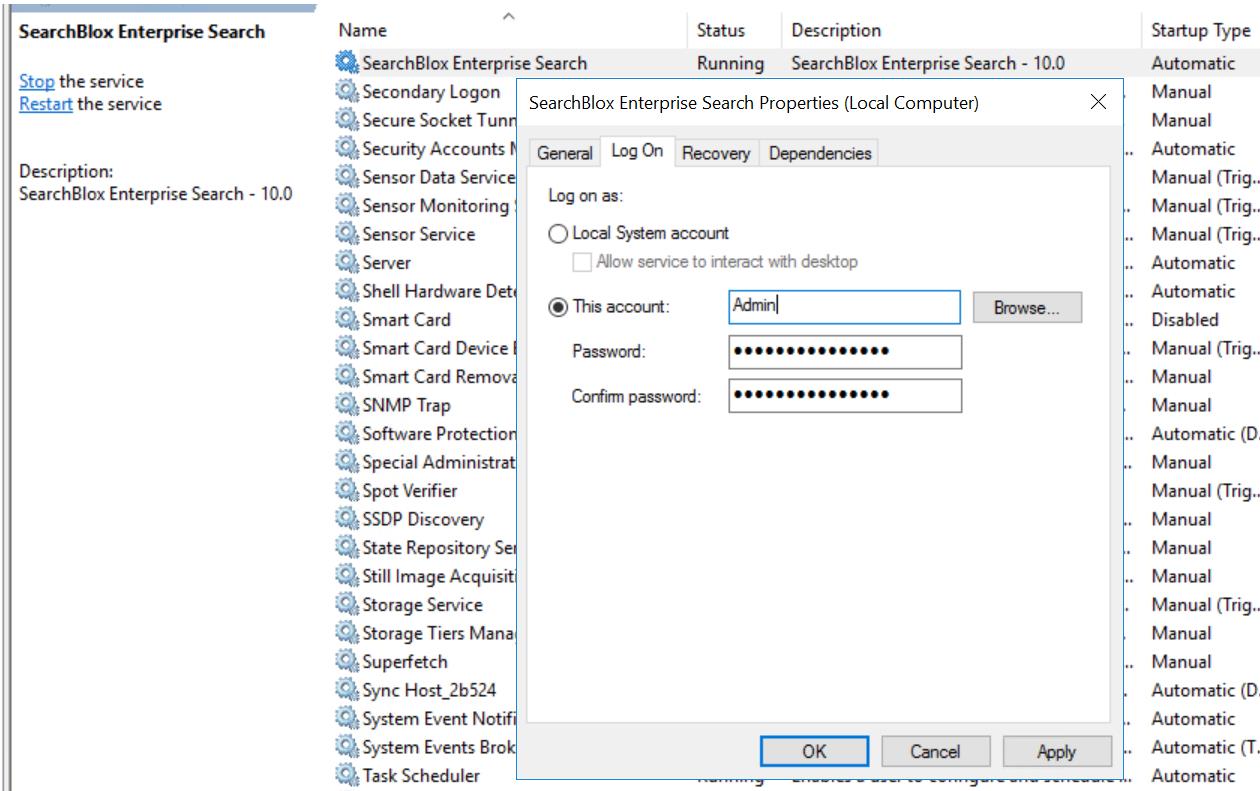
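The LDAP URL, search base, and admin credentials from the table above can also be checked outside SearchBlox with the OpenLDAP ldapsearch client, which helps separate directory problems from SearchBlox settings. This is a sketch: domain_to_base and ldap_check are hypothetical helpers, and the host and domain in the example (corp.example.com) are placeholders.

```shell
# Hypothetical helpers for checking Active Directory settings with ldapsearch.

# Turn an AD domain such as corp.example.com into a search base
# such as DC=corp,DC=example,DC=com.
domain_to_base() {
  echo "DC=$(echo "$1" | sed 's/\./,DC=/g')"
}

# Simple bind (-x) as the admin user, prompting for the password (-W),
# and read only the search base entry itself (-s base).
# Usage: ldap_check LDAP_URL USERNAME SEARCH_BASE
ldap_check() {
  ldapsearch -x -H "$1" -D "$2" -W -b "$3" -s base "(objectClass=*)" dn
}

# Example (not run here):
# ldap_check ldap://dc.corp.example.com:389 admin@corp.example.com "$(domain_to_base corp.example.com)"
```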

Admin Access to File Share

To index files from an SMB share that requires authentication:

- Run SearchBlox Service with Admin Access

Ensure the SearchBlox server service is running under an Admin account or an account with read access to the shared files.

Enter the required credentials when prompted.

- Run the Network Crawler with Admin Privileges

Similarly, execute the network crawler under an Admin account (or an account with sufficient permissions) to successfully crawl and index the files.

How to Increase Memory in the Network Connector

For Windows

- Navigate to:

<network_crawler_installationPath>/start.bat

- Locate the line:

rem set JAVA_OPTS=%JAVA_OPTS% -Xms1G -Xmx1G

- Remove rem to uncomment the line, and adjust the memory (e.g., 2G or 3G):

set JAVA_OPTS=%JAVA_OPTS% -Xms2G -Xmx2G

For Linux

- Navigate to:

<network_crawler_installationPath>/start.sh

- Uncomment and modify the line:

JAVA_OPTS="$JAVA_OPTS -Xms2G -Xmx2G"

Note: Adjust values (2G, 3G, etc.) based on available system resources.
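On Linux, the edit can be scripted with sed. This minimal sketch assumes the commented line in start.sh looks like #JAVA_OPTS="$JAVA_OPTS -Xms1G -Xmx1G" (the exact default is an assumption). set_crawler_heap is a hypothetical helper that uncomments the line, sets -Xms and -Xmx to the same value, and keeps a start.sh.bak backup.

```shell
# Hypothetical helper: set the heap size in start.sh.
# Usage: set_crawler_heap PATH_TO_START_SH SIZE   (SIZE such as 2G or 3072M)
set_crawler_heap() {
  script="$1"
  size="$2"
  # Accept a number followed by G or M only.
  if ! printf '%s\n' "$size" | grep -Eq '^[0-9]+[GgMm]$'; then
    echo "invalid size: $size" >&2
    return 1
  fi
  # Uncomment (if needed) and rewrite the heap line; keep a .bak copy.
  sed -E -i.bak \
    "s/^[#[:space:]]*JAVA_OPTS=\"[\$]JAVA_OPTS -Xms[0-9]+[GgMm] -Xmx[0-9]+[GgMm]\"/JAVA_OPTS=\"\$JAVA_OPTS -Xms$size -Xmx$size\"/" \
    "$script"
  # Fail if no matching line was found to rewrite.
  grep -qF "JAVA_OPTS=\"\$JAVA_OPTS -Xms$size -Xmx$size\"" "$script" || {
    echo "no JAVA_OPTS heap line found in $script" >&2
    return 1
  }
}

# Example: set_crawler_heap /opt/searchblox-network/start.sh 2G
```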

Delete sb_network to rerun the crawler in another collection.

Prerequisite Steps:

- Delete Existing Index

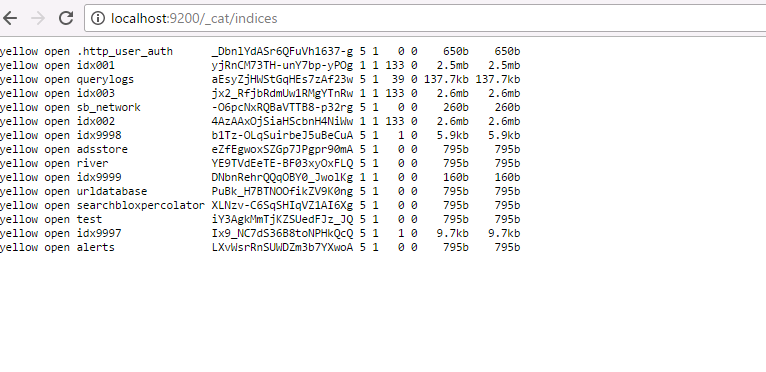

Before re-running the crawler for a different collection, you must first delete the existing sb_network index using an Elasticsearch-compatible tool.

- Verify Index Existence

You can check whether the index exists by visiting https://localhost:9200/_cat/indices and looking for sb_network in the response.

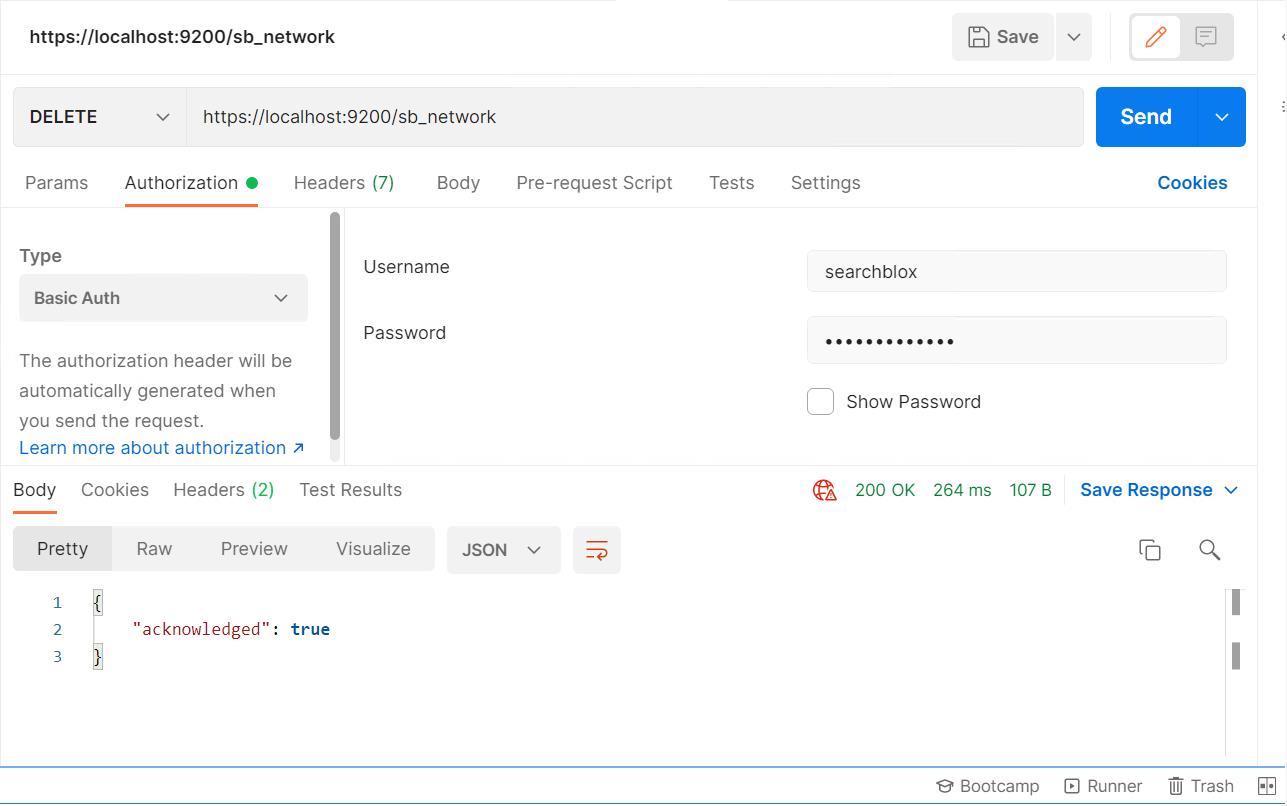
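The same check can be scripted with curl and awk. In the default _cat/indices output the index name is the third column, so has_sb_network looks only at that column. Both helper names are hypothetical, and the curl call assumes basic authentication with the OpenSearch credentials from searchblox.yml and a self-signed certificate.

```shell
# Hypothetical helpers for the sb_network check.
# Default _cat/indices columns: health status index uuid pri rep ...

# Read _cat/indices output on stdin; succeed if sb_network is listed.
has_sb_network() {
  awk '$3 == "sb_network" { found = 1 } END { exit !found }'
}

# Fetch the index list; curl prompts for the password if only a username is given.
# Usage: list_indices USERNAME
list_indices() {
  curl -k -u "$1" "https://localhost:9200/_cat/indices"
}

# Example (not run here):
# list_indices admin | has_sb_network && echo "sb_network exists"
```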

You can use Postman to send requests to OpenSearch.

Start Postman and create a DELETE request for the index: DELETE https://localhost:9200/sb_network

Look for "acknowledged": true in the response.

Check https://localhost:9200/_cat/indices again; the sb_network index should no longer be listed.
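If Postman is not available, the DELETE request and the acknowledgement check can be sketched with curl and grep. delete_sb_network and is_acknowledged are hypothetical helpers; the request assumes the same https://localhost:9200 endpoint, basic authentication, and self-signed certificate as the steps above.

```shell
# Hypothetical helpers: delete sb_network and confirm the response.

# Send DELETE for the sb_network index; curl prompts for the password.
# Usage: delete_sb_network USERNAME
delete_sb_network() {
  curl -k -u "$1" -X DELETE "https://localhost:9200/sb_network"
}

# Succeed if a response body on stdin reports "acknowledged": true.
is_acknowledged() {
  grep -Eq '"acknowledged"[[:space:]]*:[[:space:]]*true'
}

# Example (not run here):
# delete_sb_network admin | is_acknowledged && echo "sb_network deleted"
```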

Update config.yml for the new collection (for example, colname and colid), then rerun the crawler.
