Dynamic Auto Collection

SearchBlox includes a web crawler to index content from any intranet, portal, or website. The crawler can also index HTTPS-based content without any additional configuration and crawl through a proxy server or Form Authentication.

The Dynamic Auto Collection feature enhances secure content indexing by:

- Authenticating and crawling content protected by web forms

- Processing each page as a separate document

- Indexing pages after complete rendering

It supports Single Page Applications (SPAs) along with the dynamically loaded JavaScript pages.

For LINUX:

Please make sure to run the following command to install the browser dependencies, which is required for

Dynamic Auto Collectionwithout form URL:

sudo apt-get install libxkbcommon0 libgbm1

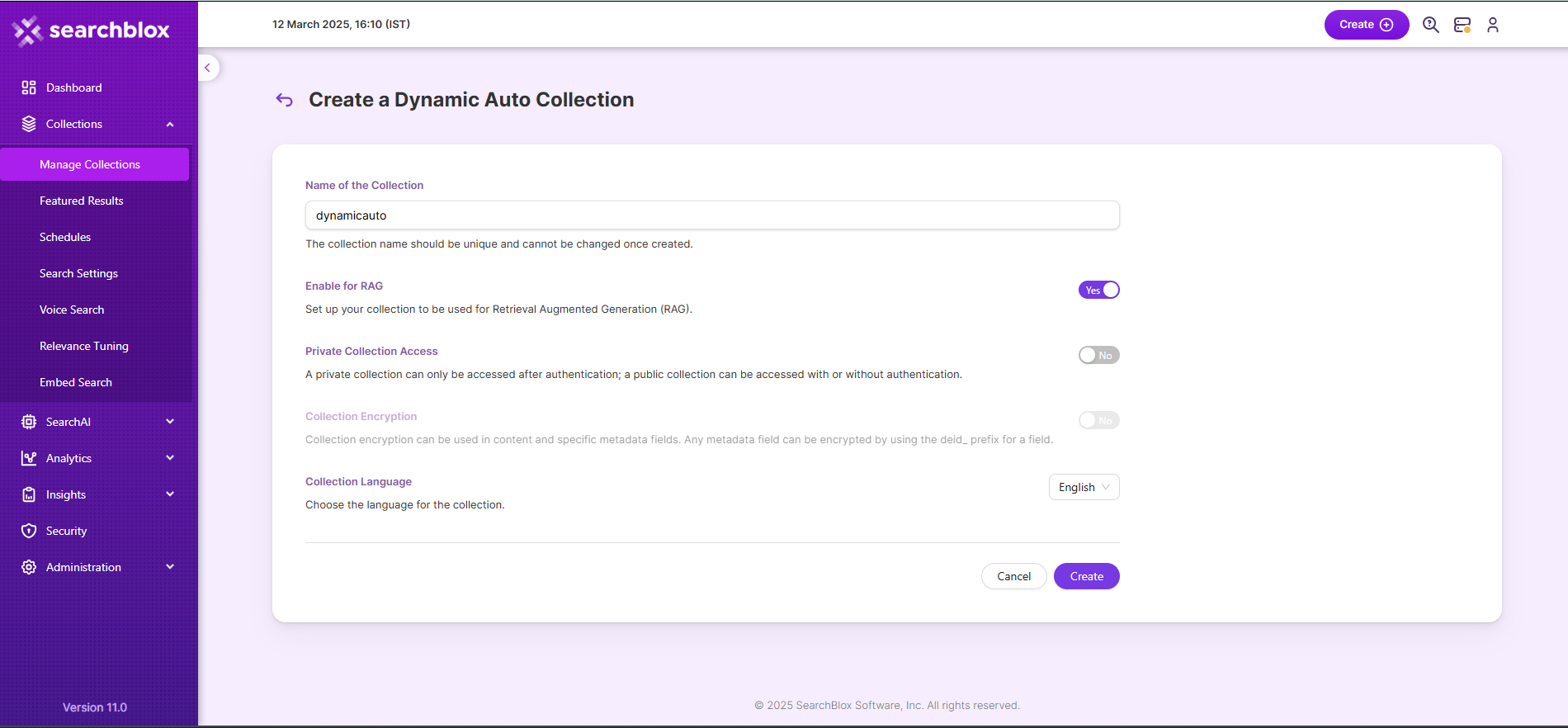

Creating a Dynamic Auto Collection

To create a Dynamic Auto Collection, follow these steps:

- Log in to the Admin Console

- Navigate to the Collections tab and click either "Create a New Collection" or the "+" icon

- Select "Dynamic Auto Collection" as the Collection Type

- Enter a unique name for your collection (e.g., "DynamicAuto")

- Configure RAG settings:

- Enable for Hybrid RAG search

- Disable if not required

- Set access permissions:

- Choose between Private or Public Collection Access

- Configure Collection Encryption based on your security requirements

- Select the content language (if other than English)

- Click Save to create the collection

- Once the Dynamic Auto collection is created you will be taken to the Path tab.

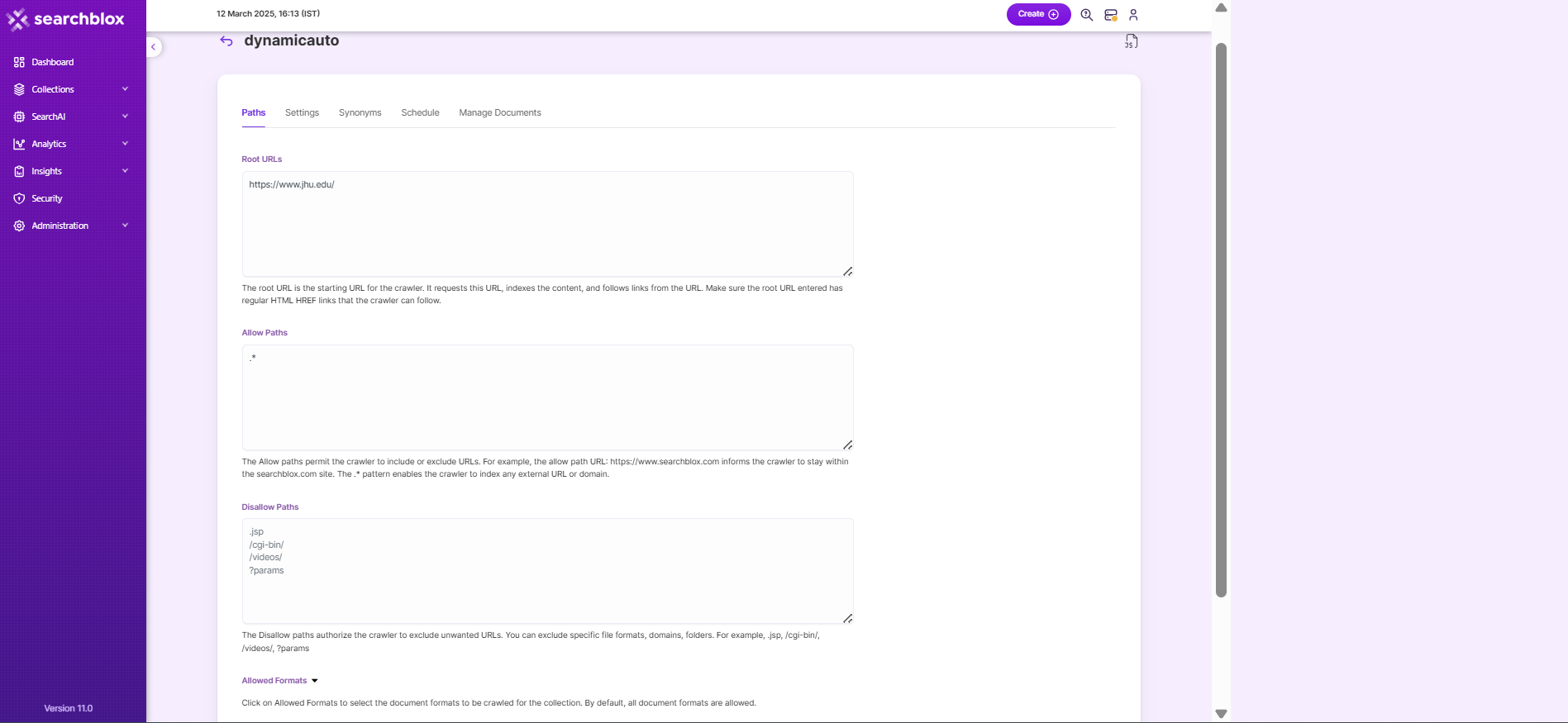

Dynamic Auto Collection Paths

The Dynamic Auto collection Paths allow you to configure the Root URLs and the Allow/Disallow paths for the crawler. To access the paths for the WEB collection, click on the collection name in the Collections list.

Root URLs

- The root URL is the starting URL for the crawler. It requests this URL, indexes the content, and follows links from the URL.

- The root URL should be single url, we can crawl linked with that url.

- Make sure the root URL entered has regular HTML HREF links that the crawler can follow.

Allow/Disallow Paths

- Allow/Disallow paths ensure the crawler can include or exclude URLs.

- Allow and Disallow paths make it possible to manage a collection by excluding unwanted URLs.

- It is mandatory to give an allow path in Dynamic Auto collection to limit the indexing within the subdomain provided in Root URLs.

| Field | Description |

|---|---|

| Root URLs | The starting URL for the crawler. You need to provide at least one root URL. |

| Allow Paths | http://www.cnn.com/ (Informs the crawler to stay within the cnn.com site.) .* (Allows the crawler to go any external URL or domain.) |

| Disallow Paths | .jsp /cgi-bin/ /videos/ ?params |

| Allowed Formats | Select the document formats that need to be searchable within the collection. |

Important Note:

- Enter the Root URL domain name (for example cnn.com or nytimes.com) within the Allow Paths to ensure the crawler stays within the required domains.

- If .* is left as the value within the Allow Paths, the crawler will go to any external domain and index the web pages.

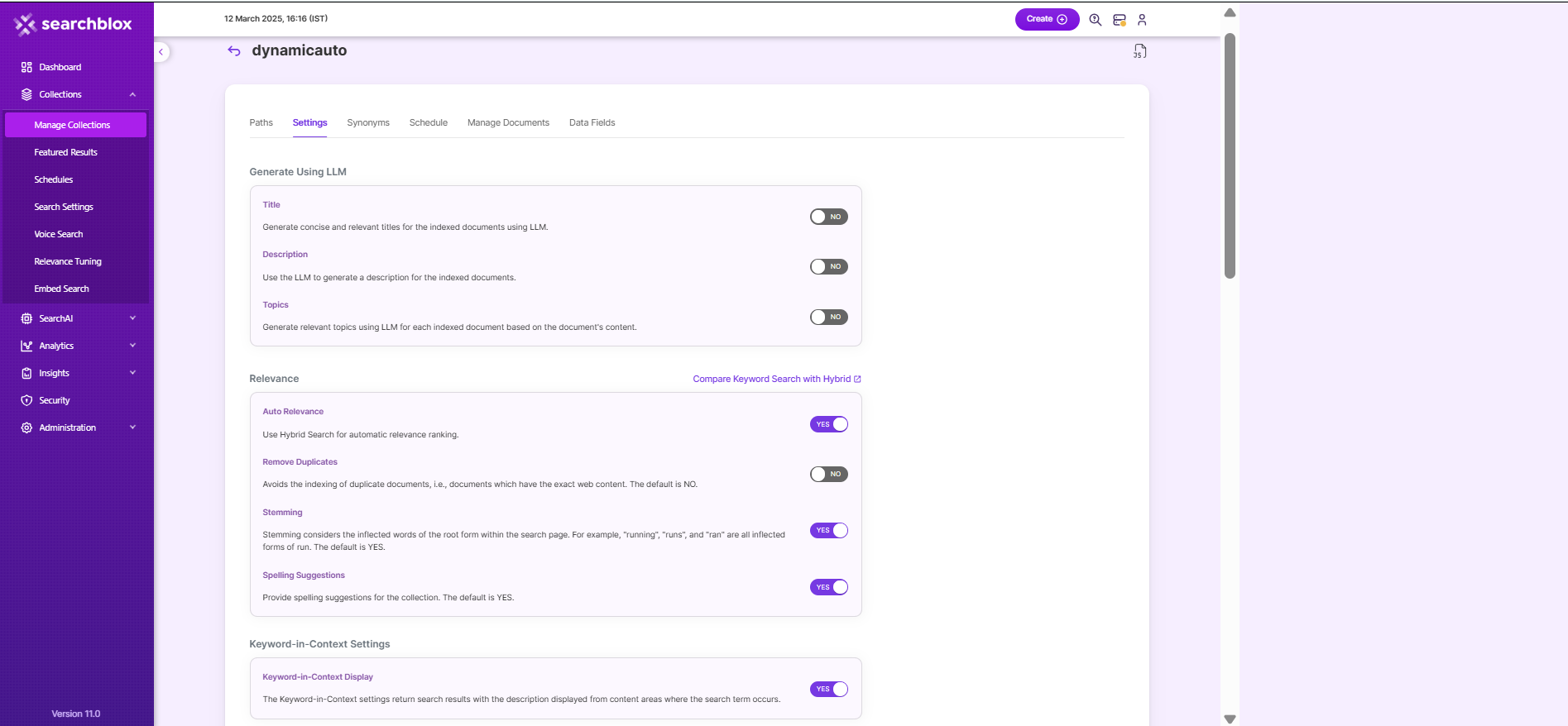

Dynamic Auto Collection Settings

The Settings page contains configurable parameters for the SearchBlox crawler. Default parameters are automatically applied when a new collection is created, but most settings can be customized to meet your specific requirements.

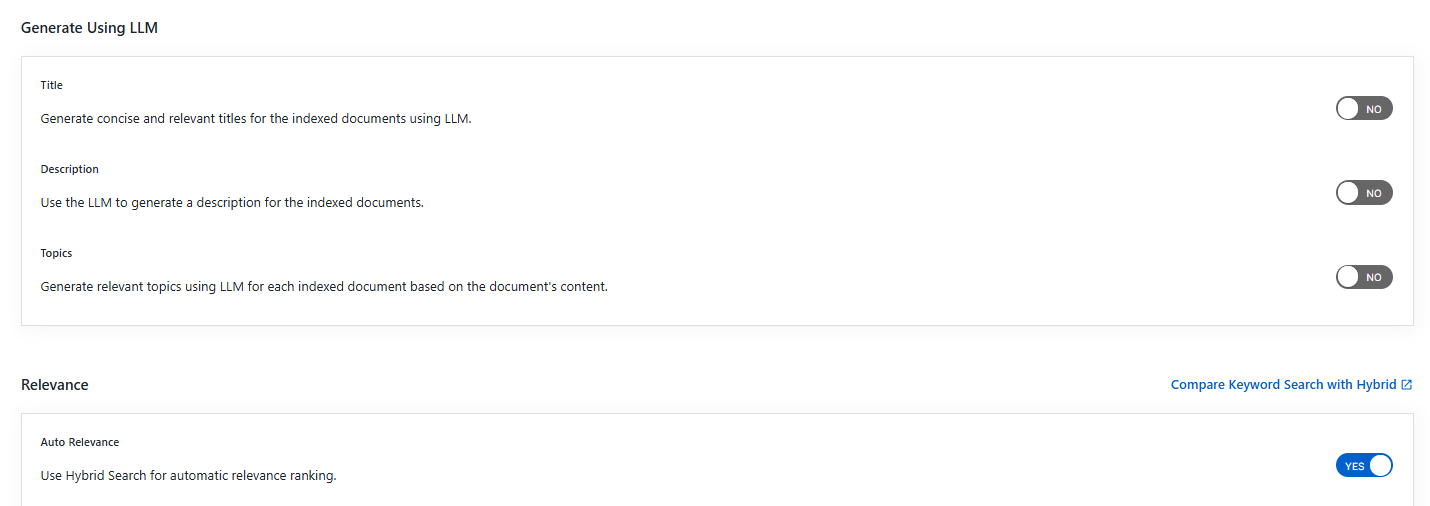

Generate Title, Description and Topics using SearchAI PrivateLLM and Enable Hybrid Search:

- Choose and enable

Generate Using LLMandAuto Relevance

- By clicking

Compare Keyword Search with Hybridlink, will redirect to the Comparison Plugin

Settings Description Title Generates concise and relevant titles for the indexed documents using LLM. Description Generates the description for indexed documents using LLM. Topic Generates relevant topics for indexed documents using LLM based on document's content. Auto Relevance Enable/Disable Hybrid Search for automatic relevance ranking

| Section | Setting | Description |

|---|---|---|

| Relevance | Auto Relevance | Enable/Disable Hybrid Search for automatic relevance ranking |

| Relevance | Remove Duplicates | When enabled, prevents indexing duplicate documents. |

| Relevance | Stemming | When stemming is enabled, inflected words are reduced to root form. For example, "running", "runs", and "ran" are the inflected form of run. |

| Relevance | Spelling Suggestions | The spelling suggestions are based on the words found within the search index. By enabling Spelling Suggestion in collection settings, spelling suggestions will appear for the search box in both regular and faceted search. |

| Keyword-in-Context Search Settings | Keyword-in-Context Display | The keyword-in-context returns search results with the description displayed from content areas where the search term occurs. |

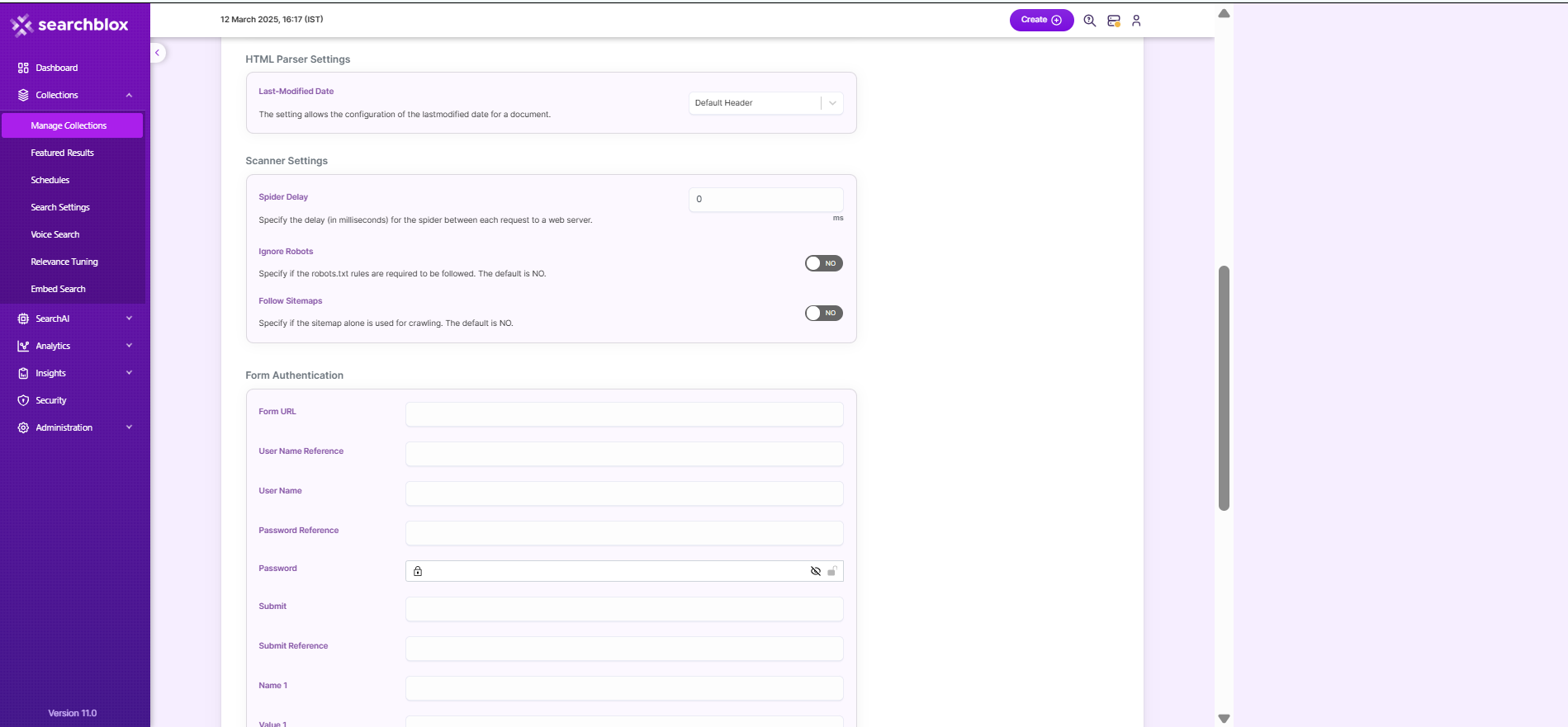

| HTML Parser Settings | Last-Modified Date | This setting determines from where the lastmodified date is fetched for the indexed document. By default it is from webpage header If the user wants it from Meta tags they can select Meta option If the user wants from custom date set in the webserver for SearchBlox then they can select this option |

| Scanner Settings | Spider Delay | Specifies the wait time in milliseconds for the spider between HTTP requests to a web server. |

| Scanner Settings | Ignore Robots | Value is set to Yes or No to tell the spider to obey robot rules or not. The default value is no. |

| Scanner Settings | Follow Sitemaps | Value is set to Yes or No to tell the spider whether sitemaps alone can be indexed, or if all of the URLs have to be indexed respectively. The default value is no. |

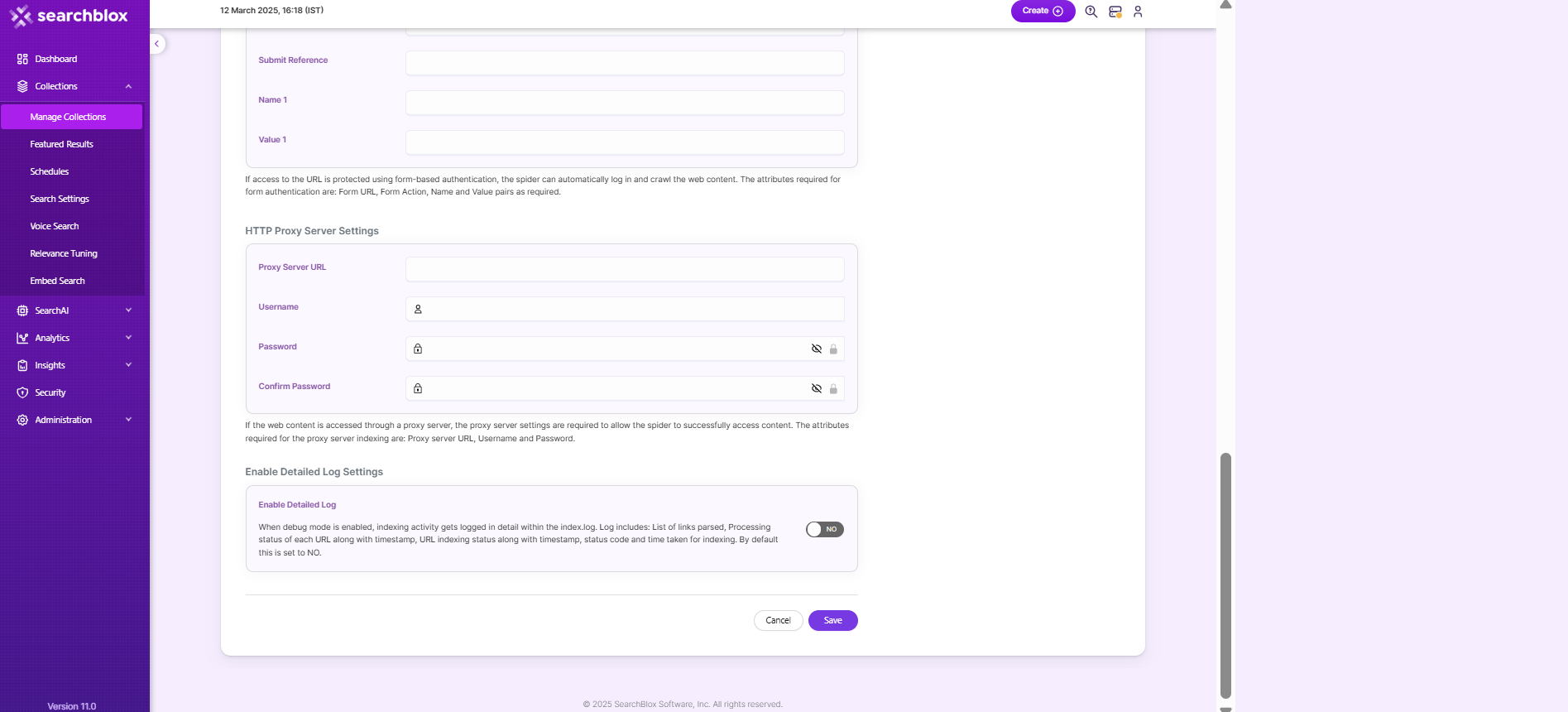

| Form Authentication | Form authentication fields | When access to documents is protected using form-based authentication, the spider can automatically log in and access the documents. The attributes required for form authentication are: Form URL: It should be root url/Landing page url after authentication (crawler get started crawling from this point). Username reference: ID or XPATH of the user name element(we can get it from inspect element of the User name Element) User name: <User_name_input> Password reference: ID or XPATH of the password element(we can get it from inspect element of the password Element) Password: <password> submit: submit (dont change) submit reference: ID or XPATH of the submit element(we can get it from inspect element of the submit Element) name 1: action for wait/iframe (optional) value 1: reference id of wait/iframe element if needed(optional) |

| HTML Proxy server Settings | Proxy server credentials | When HTTP content is accessed through proxy servers, the proxy server settings are required to enable the spider to successfully access and index content. The attributes required for proxy server indexing are: Proxy server URL, Username and Password. |

| Enable Detailed Log Settings | Enable Logging | Provides the indexer activity in detail in ..\webapps\ROOT\logs\index.log. The details that occur in the index.log when logging or debug logging mode are enabled are: List of links that are crawled. Processing done on each URL along with timestamp on when the processing starts, whether the indexing process is taking place or URL gets skipped, and whether the URL gets indexed. All data will be available as separate entries in index.log. Timestamp of when the indexing completed, and the time taken for indexing across the indexed URL entry in the log file. Last modified date of the URL. * If the URL is skipped or not, and why. |

Important for Linux users !

- Before indexing the created collection, navigate to

/opt/searchblox/webapps/ROOT/WEB-INF/authconfig.ymland change theheadlessvalue totrue, as shown below:

headless: true.- Restarting SearchBlox service is required to apply the above changes.

NOTE:

To add the additional time-outs, please update the below parameter in

/webapps/ROOT/WEB-INF/authconfig.yml.

submit-delay:30, where 30 will be in seconds.Restarting SearchBlox service is required to apply the above changes.

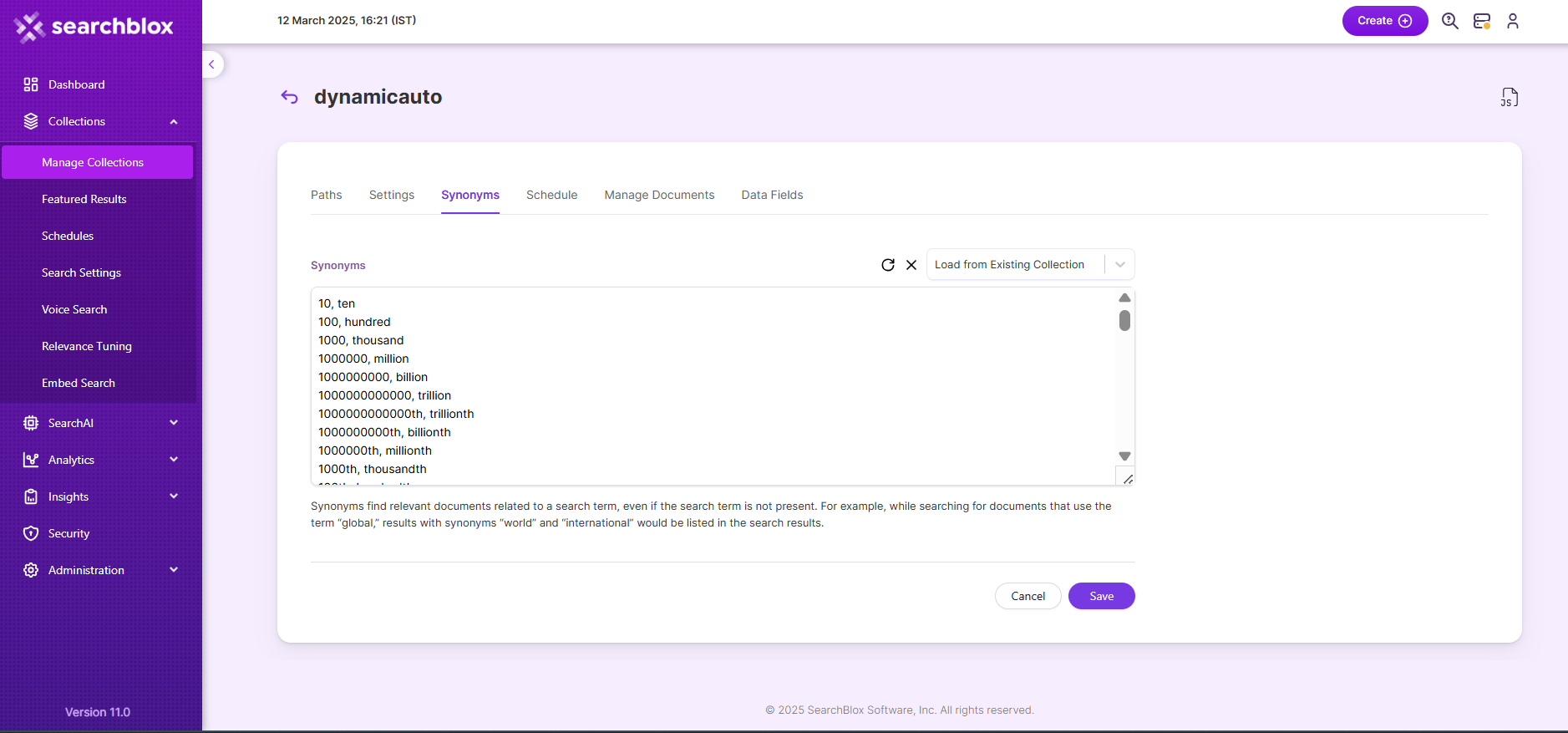

Synonyms

Synonyms find relevant documents related to a search term, even if the search term is not present. For example, while searching for documents that use the term “global,” results with synonyms “world” and “international” would be listed in the search results.

We have an option to load Synonyms from the existing documents.

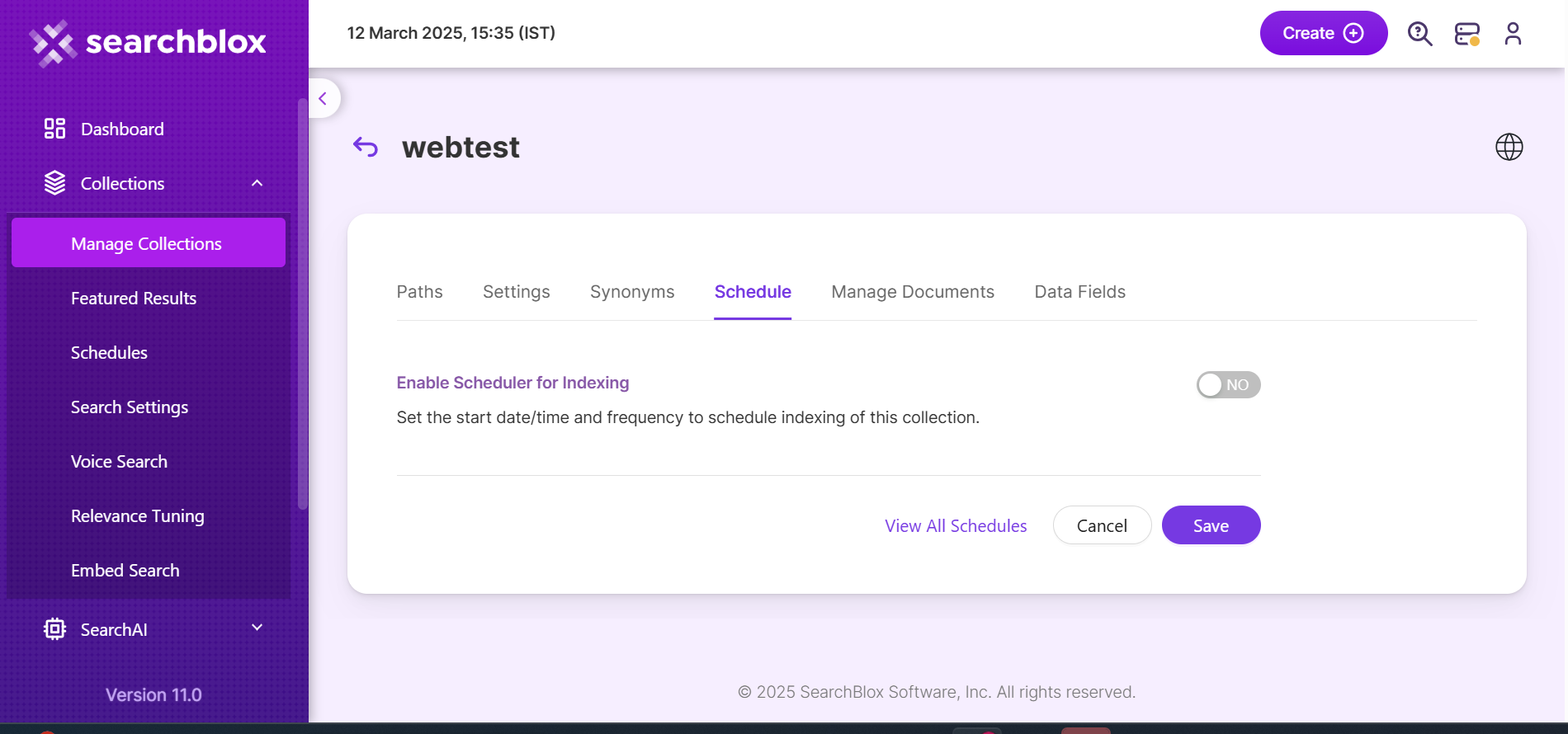

Schedule and Index

Sets the frequency and the start date/time for indexing a collection, from the root URLs. Schedule Frequency supported in SearchBlox is as follows:

- Once

- Hourly

- Daily

- Every 48 Hours

- Every 96 Hours

- Weekly

- Monthly

The following operation can be performed in Dynamic Auto collections

| Activity | Description |

|---|---|

| Enable Scheduler for Indexing | Once enabled, you can set the Start Date and Frequency |

| Save | For each collection, indexing can be scheduled based on the above options. |

| View all Collection Schedules | Redirects to the Schedules section, where all the Collection Schedules are listed. |

Manage Documents

- Using Manage Documents tab we can do the following operations:

- Add/Update

- Filter

- View content

- View meta data

- Refresh

- Delete

-

To add a document click on "+" icon as shown in the screenshot.

-

Enter the document/URL, Click on add/update.

-

Once the document is updated you will be able to see the document URL on the screen and we will be able to perform the above mentioned operations.

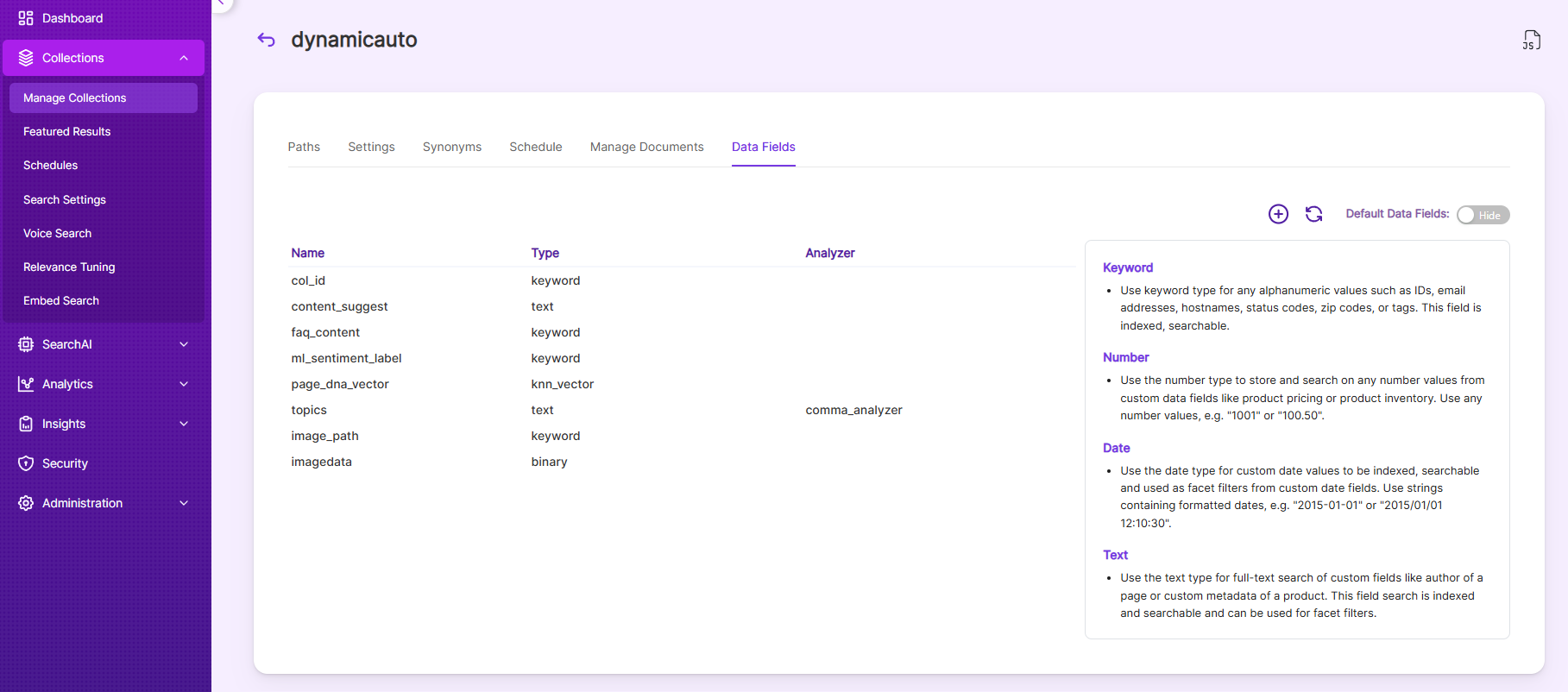

Data Fields

Using Data Fields tab we can create custom fields for search and we can see the Default Data Fields with non-encrypted collection. SearchBlox supports 4 types of Data Fields as listed below:

- Keyword

- Number

- Date

- Text

Note:

Once the Data fields are configured, collection must be cleared and re-indexed to take effect.

To know more about Data Fields please refer to Data Fields Tab

NOTE:

If you face any trouble in indexing

Dynamic Auto Collectionand in logs if you find the following error:

ERROR <xx xxx xxxx 10:56:01,368> <status> <Exception caught on initialize browser: >

com.microsoft.playwright.PlaywrightException: Error { message='

Host system is missing dependencies to run browsers.Run the following command:

sudo apt-get install libxkbcommon0 libgbm1

Updated 9 months ago