Dynamic Collection

SearchBlox can index web content that is generated dynamically by JavaScript, such as Single Page Applications (SPAs). Indexing a Dynamic Collection is slower than indexing a WEB Collection because each page has to be rendered dynamically.

Prerequisites

- Chrome browser has to be installed on the system to use Dynamic Collection.

- Chrome browser version must be 89 or higher.

- The Chrome browser version and the Chrome driver version found in the following file path must match:

<SearchBlox-Installation-Path>\webapps\ROOT\drivers
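As a quick way to check this prerequisite on Linux, the sketch below compares the major version reported by Chrome with the one reported by the driver. It assumes that matching on the major version number is sufficient, that both binaries print a dotted version string when run with --version, and that the driver sits at /opt/searchblox/webapps/ROOT/drivers/chromedriver_linux. The helper names major_version and versions_match are hypothetical and not part of SearchBlox.

```shell
# Hypothetical helpers, not shipped with SearchBlox.

# major_version "VERSION STRING"
# Prints the first number that is followed by a dot,
# e.g. the 120 in "Google Chrome 120.0.6099.109".
major_version() {
  printf '%s\n' "$1" | sed -n 's/^[^0-9]*\([0-9][0-9]*\)\..*$/\1/p'
}

# versions_match "CHROME VERSION STRING" "DRIVER VERSION STRING"
# Succeeds only if both strings contain a version and the major numbers are equal.
versions_match() {
  [ -n "$(major_version "$1")" ] &&
    [ "$(major_version "$1")" = "$(major_version "$2")" ]
}

# Example usage (assumed install paths; adjust to your system):
#   chrome=$(google-chrome-stable --version)
#   driver=$(/opt/searchblox/webapps/ROOT/drivers/chromedriver_linux --version)
#   if versions_match "$chrome" "$driver"; then
#     echo "Chrome and driver major versions match"
#   else
#     echo "Version mismatch: $chrome vs $driver"
#   fi
```

If the check reports a mismatch, download the driver build that corresponds to the installed Chrome version before creating the collection.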

Installation

- Install Chrome by running the commands for your Linux distribution. On RPM-based distributions (yum):

wget https://dl.google.com/linux/direct/google-chrome-stable_current_x86_64.rpm

sudo yum install ./google-chrome-stable_current_*.rpm

To check the installed Chrome version:

google-chrome-stable --version

On Debian-based distributions (apt-get and dpkg):

sudo apt-get install -y libappindicator1 fonts-liberation

sudo apt-get install -f

wget https://dl.google.com/linux/direct/google-chrome-stable_current_amd64.deb

sudo dpkg -i google-chrome*.deb

Windows

- Download the required driver from here.

- Rename the downloaded file to chromedriver_win.exe and place it in

C:\SearchBloxServer\webapps\ROOT\drivers

Linux

- Download the required driver from here.

- Rename the downloaded file to chromedriver_linux and place it in

/opt/searchblox/webapps/ROOT/drivers

- Provide SearchBlox user permissions using the following commands.

chown -R searchblox:searchblox chromedriver_linux

chmod -R 755 chromedriver_linux

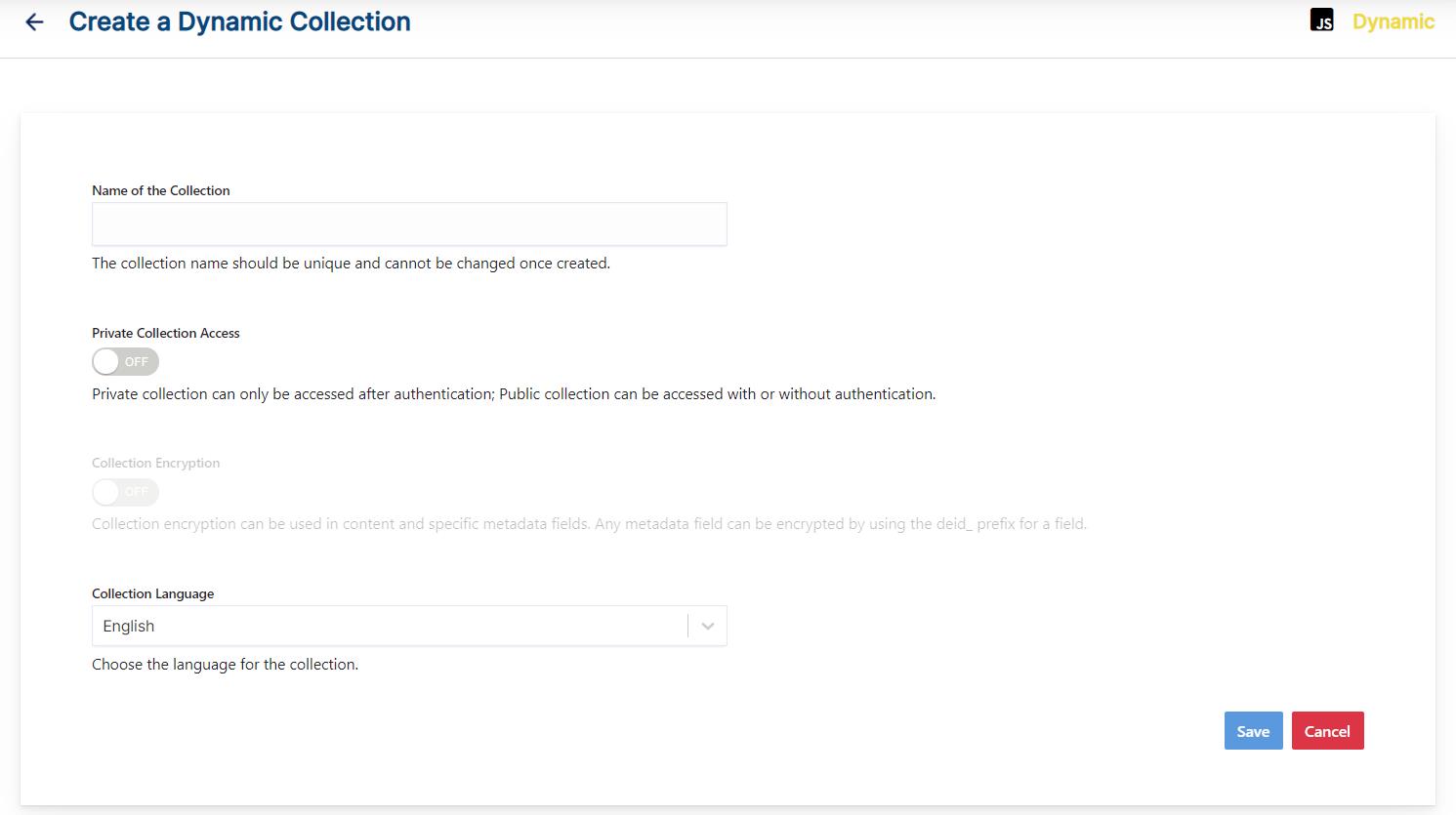

Creating Dynamic Collection

You can create a Dynamic Collection with the following steps:

- After logging in to the Admin Console, select the Collections tab and click on Create a New Collection or the "+" icon.

- Choose Dynamic Collection as Collection Type.

- Enter a unique name for your collection.

- Choose Private/Public Collection Access and Collection Encryption as per the requirements.

- Choose the language of the content (if the language is other than English).

- Click Save to create the collection.

- Once the Dynamic Collection is created, you will be taken to the Path tab.

Dynamic Collection Paths

The Dynamic Collection Paths allow you to configure the Root URLs and the Allow/Disallow paths for the crawler. To access the paths for the Dynamic Collection, click on the collection name in the Collections list.

Root URLs

- The root URL is the starting URL for the crawler. The crawler requests this URL, indexes the content, and follows the links found on the page.

- The root URL entered should have regular HTML HREF links that the crawler can follow.

- In the Paths sub-tab, enter at least one root URL for the Dynamic Collection in the Root URLs field.

Allow/Disallow Paths

- Allow/Disallow paths tell the crawler which URLs to include and which to exclude.

- Allow and Disallow paths make it possible to manage a collection by excluding unwanted URLs.

- An allow path is mandatory for a Dynamic Collection. It limits indexing to the subdomain provided in Root URLs.

| Field | Description |
|---|---|
| Root URLs | The starting URL for the crawler. You need to provide at least one root URL. |
| Allow Paths | Examples: http://www.cnn.com/ (informs the crawler to stay within the cnn.com site); .* (allows the crawler to go to any external URL or domain). |
| Disallow Paths | Examples: .jsp, /cgi-bin/, /videos/, ?params |
| Allowed Formats | Select the document formats that need to be searchable within the collection. |

Note

- Keep the crawler within the required domain(s).

- Enter the Root URL domain name(s) (for example cnn.com or nytimes.com) within the Allow Paths to ensure the crawler stays within the required domains.

- If .* is left as the value within the Allow Paths, then the crawler will go to any external domain and index the web pages.
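To make the effect of these rules concrete, here is a minimal sketch of how a URL could be tested against the example Allow and Disallow paths from the table. It is only an illustration: it assumes that allow paths behave like regular expressions matched anywhere in the URL and that disallow paths behave like literal fragments. The source does not specify SearchBlox's actual matching logic, and the function names are hypothetical.

```shell
# Illustration only: SearchBlox's real matching rules may differ.

# is_allowed URL PATTERN...
# Succeeds if URL matches at least one pattern (treated as an extended regex).
is_allowed() {
  url=$1
  shift
  for pattern in "$@"; do
    if printf '%s\n' "$url" | grep -Eq -- "$pattern"; then
      return 0
    fi
  done
  return 1
}

# is_disallowed URL FRAGMENT...
# Succeeds if URL contains at least one fragment (treated as literal text).
is_disallowed() {
  url=$1
  shift
  for fragment in "$@"; do
    case $url in
      *"$fragment"*) return 0 ;;
    esac
  done
  return 1
}

# should_crawl URL
# Applies the example values from the table: stay on cnn.com, skip unwanted paths.
should_crawl() {
  is_allowed "$1" 'http://www.cnn.com/' &&
    ! is_disallowed "$1" '.jsp' '/cgi-bin/' '/videos/' '?params'
}

# Example usage:
#   should_crawl 'http://www.cnn.com/world/index.html' && echo "crawl"
#   should_crawl 'http://www.cnn.com/videos/clip.html' || echo "skip"
```

Replacing the allow pattern with .* in this sketch lets every URL through the first check, which mirrors the warning above about the crawler leaving the required domain.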

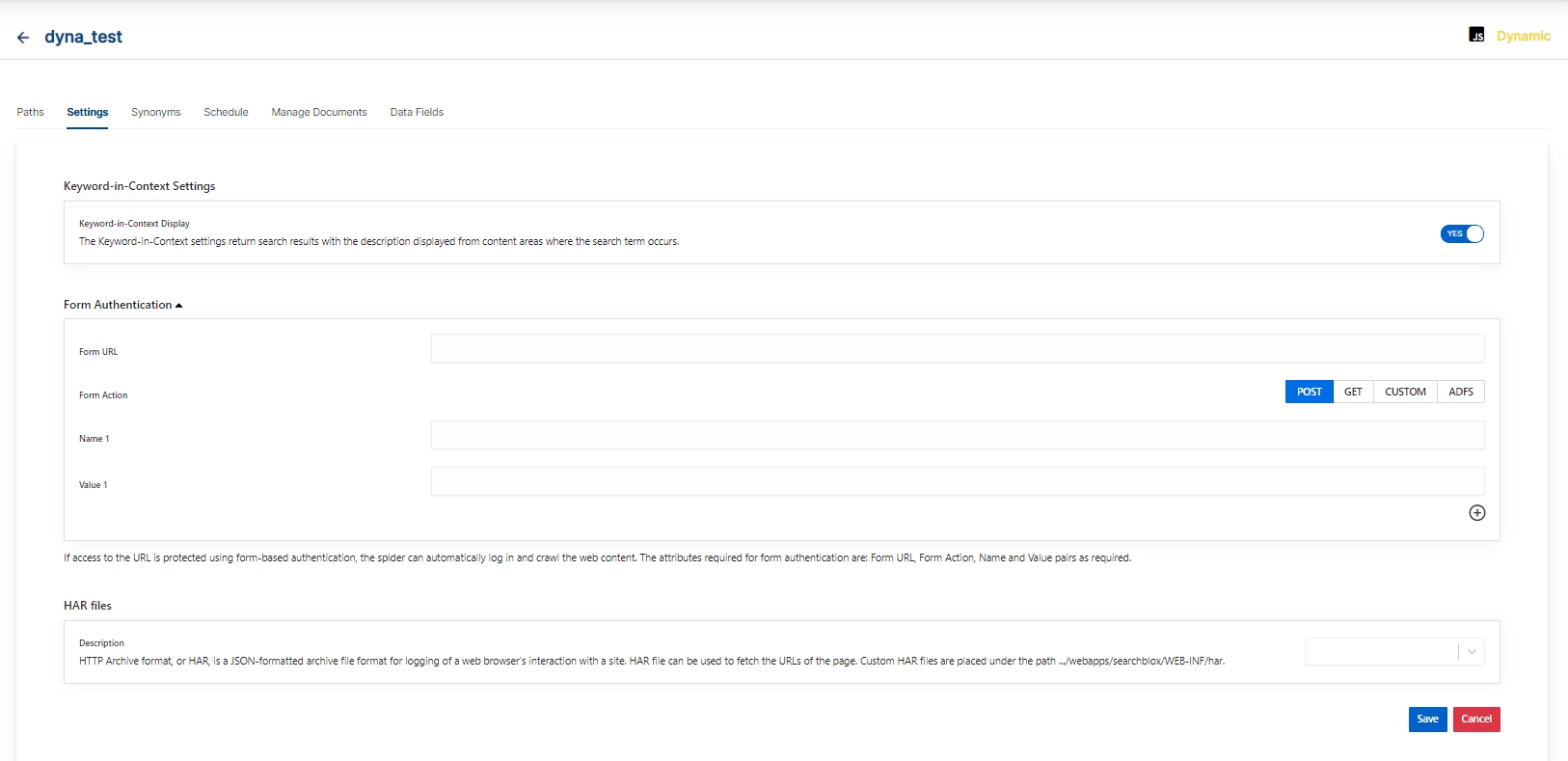

Dynamic Collection Settings

- Most of the settings available for a WEB Collection are not available for a Dynamic Collection. A Dynamic Collection supports Keyword-in-Context Display, Form Authentication, and an option to upload HAR files to index the dynamically generated content.

- A HAR (HTTP Archive) file can be downloaded from a dynamically generated website. Copy this file into the "har" folder in WEB-INF.

- After SearchBlox is restarted, the HAR file can be selected from the Dynamic Collection settings for indexing.
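The copy step can be sketched as a small shell helper. This is an assumption-laden example rather than a SearchBlox tool: the function name install_har is hypothetical, the destination "har" folder is passed in as an argument because its full path depends on your installation, and the content check is deliberately crude (it only looks for the top-level "log" key that HAR files contain).

```shell
# Hypothetical helper, not part of SearchBlox.

# install_har HAR_FILE HAR_DIR
# Copies HAR_FILE into HAR_DIR after a few basic sanity checks.
install_har() {
  har_file=$1
  har_dir=$2

  # Only accept files with a .har extension.
  case $har_file in
    *.har) ;;
    *) echo "not a .har file: $har_file" >&2; return 1 ;;
  esac

  [ -f "$har_file" ] || { echo "file not found: $har_file" >&2; return 1; }
  [ -d "$har_dir" ] || { echo "har folder not found: $har_dir" >&2; return 1; }

  # A HAR file is JSON with a top-level "log" object; this is a rough check only.
  grep -q '"log"' "$har_file" || { echo "no \"log\" key in: $har_file" >&2; return 1; }

  cp -- "$har_file" "$har_dir"/
}

# Example usage (the destination path is a placeholder for your WEB-INF/har folder):
#   install_har ./mysite.har /path/to/WEB-INF/har
```

SearchBlox still has to be restarted after the copy before the file appears in the collection settings.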

| Section | Setting | Description |

|---|---|---|

| Keyword-in-Context Search Settings | Keyword-in-Context Display | The keyword-in-context returns search results with the description displayed from content areas where the search term occurs. |

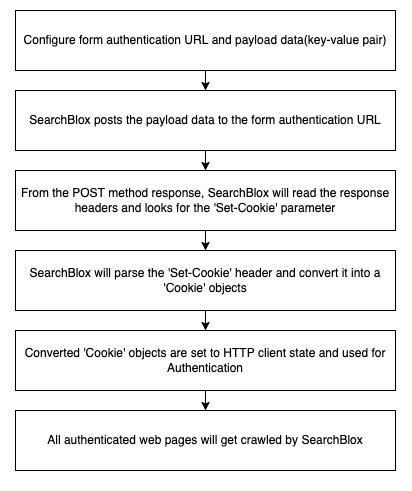

| Form Authentication | Form Authentication | If access to the URL is protected using form-based authentication, the spider can automatically log in and crawl the web content. The attributes required for form authentication are: Form URL, Form Action, Name and Value pairs as required. |

| HAR files | HAR files | This HAR file that is required to fetch the URLs of the page must be selected here. Please note that it is required to copy the downloaded HAR file into ../WEB-INF/har folder |

| Enable Content API | Enable Content API | Provides the ability to crawl the content with special characters included. |

Form Authentication Workflow

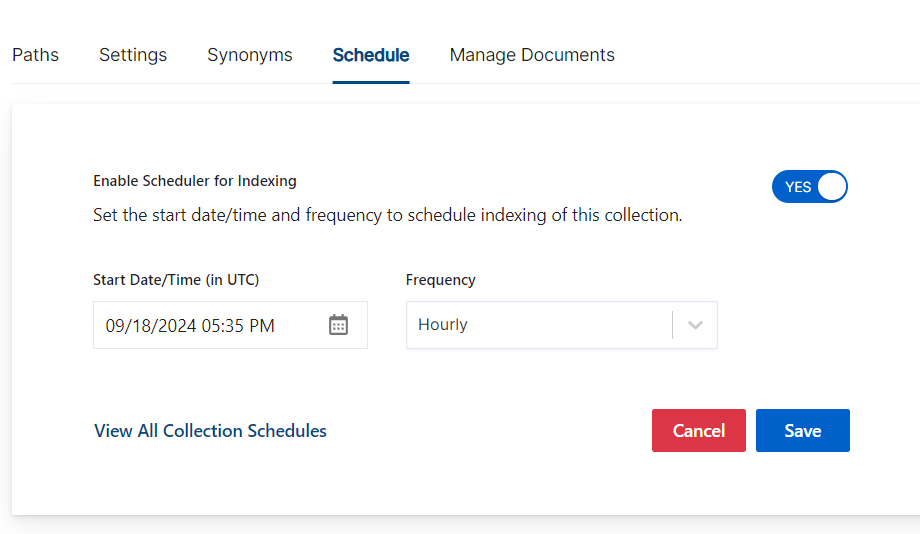
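The Form Action and the Name and Value pairs needed for form authentication can often be read from the login page's HTML. The sketch below lists the form and input tags found in HTML passed on standard input. It assumes the login form is present in the static HTML (a form rendered by JavaScript will not show up this way) and that grep supports the -o option. The function name list_form_fields and the example URL are hypothetical.

```shell
# Hypothetical helper for inspecting a login page; not part of SearchBlox.

# list_form_fields
# Reads HTML on standard input and prints each <form ...> and <input ...> tag
# on its own line, so the action attribute and field names are easy to spot.
list_form_fields() {
  # Join lines first so that tags split across several lines are still matched.
  tr '\n' ' ' | grep -oiE '<(form|input)[^>]*>'
}

# Example usage (placeholder URL):
#   curl -s https://example.com/login | list_form_fields
```

The action attribute of the form tag corresponds to the Form Action, and the name attributes of the input tags give the names to use in the Name and Value pairs.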

Schedule and Index

The schedule sets the frequency and the start date/time for indexing a collection, starting from the root URLs. SearchBlox supports the following schedule frequencies:

- Once

- Hourly

- Daily

- Every 48 Hours

- Every 96 Hours

- Weekly

- Monthly

A Dynamic Collection can be indexed on a schedule, on demand, or through API requests.

Best Practices

- Dynamic Collection is recommended only for content generated using JavaScript (dynamically generated).

- Use WEB Collection for static web content.

- Do not schedule two collection operations (Index) for the same time.

- If you have multiple collections, schedule indexing for no more than 3 collections, each at a different time interval.
