HTTP Collection

SearchBlox includes a web crawler to index content from any intranet, portal, or website. The crawler can index HTTPS-based content without any additional configuration, and it can crawl through a proxy server or behind HTTP Basic Authentication or Form Authentication.

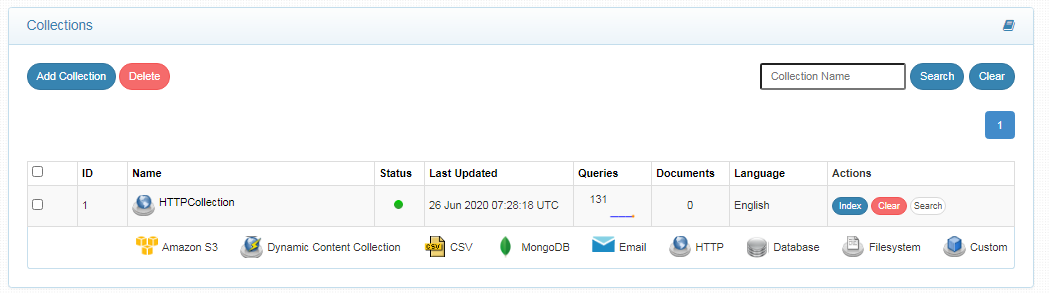

Creating HTTP Collection

- After logging in to the Admin Console, click the Add Collection button.

- Enter a unique Collection name for the data source (for example, intranetsite).

- Choose HTTP Collection as the Collection Type.

- Choose the language of the content (if the language is other than English).

- Click Add to create the collection.

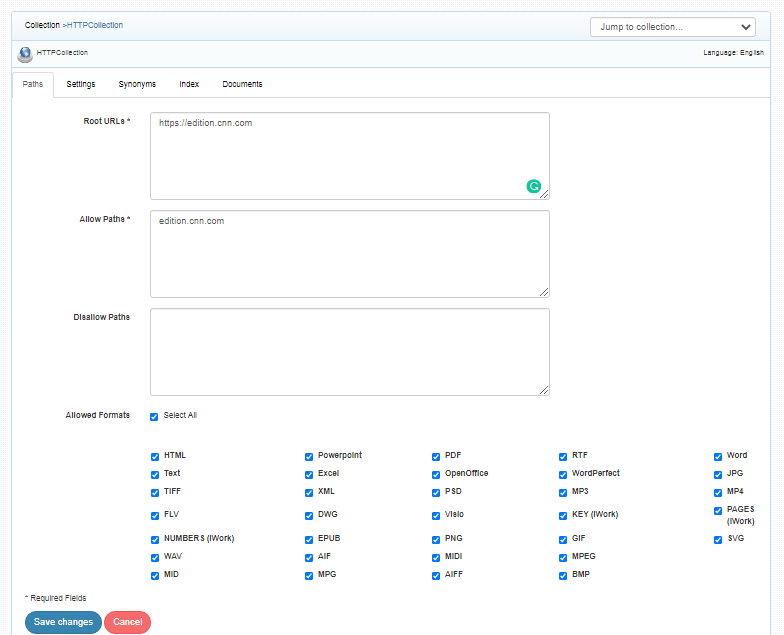

HTTP Collection Paths

The Paths settings of an HTTP collection let you configure the Root URLs and the Allow/Disallow paths for the crawler. To access them, click the collection name in the Collections list.

Root URLs

- The root URL is the starting URL for the crawler. The crawler requests this URL, indexes its content, and follows the links found on that page.

- Make sure the root URL entered has regular HTML HREF links that the crawler can follow.

- In the Paths sub-tab, enter at least one root URL for the HTTP Collection in the Root URLs field.
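
To illustrate why the root URL needs regular HREF links, the following is a minimal sketch of link extraction using only the Python standard library. It is not SearchBlox's crawler; the names `LinkExtractor` and `extract_links` and the example.com URLs are hypothetical. It shows that only anchors carrying an `href` attribute yield a URL the crawler could follow.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags (illustrative only)."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links against the page they were found on.
            self.links.append(urljoin(self.base_url, href))


def extract_links(base_url, html):
    """Return the followable links found in an HTML page."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links
```

An anchor that navigates only through a script handler (for example `<a onclick="go()">`) has no `href`, so a crawler working this way would find nothing to follow from it.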

Allow/Disallow Paths

- Allow/Disallow paths tell the crawler which URLs to include or exclude.

- Together they make it possible to manage a collection by excluding unwanted URLs.

- An Allow path is mandatory in an HTTP collection; it limits indexing to the subdomain provided in Root URLs.

| Field | Description |
|---|---|
| Root URLs | The starting URL for the crawler. You need to provide at least one root URL. |
| Allow Paths | Examples: `http://www.cnn.com/` (tells the crawler to stay within the cnn.com site); `.*` (allows the crawler to go to any external URL or domain). |
| Disallow Paths | Examples: `.jsp`, `/cgi-bin/`, `/videos/`, `?params` |
| Allowed Formats | Select the document formats that need to be searchable within the collection. |

Important Note:

- Enter the Root URL domain name(s) (for example cnn.com or nytimes.com) within the Allow Paths to ensure the crawler stays within the required domains.

- If .* is left as the value within the Allow Paths, the crawler will go to any external domain and index the web pages.
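
The effect of Allow and Disallow paths can be sketched as a simple URL filter. This is a hedged illustration, not SearchBlox's implementation: it assumes the paths behave like regular expressions searched anywhere in the URL and that a Disallow match wins over an Allow match. The patterns are escaped versions of the examples in the table, and `is_crawlable` is a hypothetical name.

```python
import re

# Example values modelled on the table above. The escaping and the matching
# rules are assumptions, not SearchBlox's documented behaviour.
ALLOW_PATHS = [r"^http://www\.cnn\.com/"]
DISALLOW_PATHS = [r"\.jsp", r"/cgi-bin/", r"/videos/", r"\?params"]


def is_crawlable(url, allow=ALLOW_PATHS, disallow=DISALLOW_PATHS):
    """Return True if url matches an allow pattern and no disallow pattern."""
    if any(re.search(pattern, url) for pattern in disallow):
        return False
    return any(re.search(pattern, url) for pattern in allow)
```

Under these assumptions, replacing the allow list with `[r".*"]` lets any URL through that is not explicitly disallowed, which mirrors the warning above about the crawler leaving the required domains.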

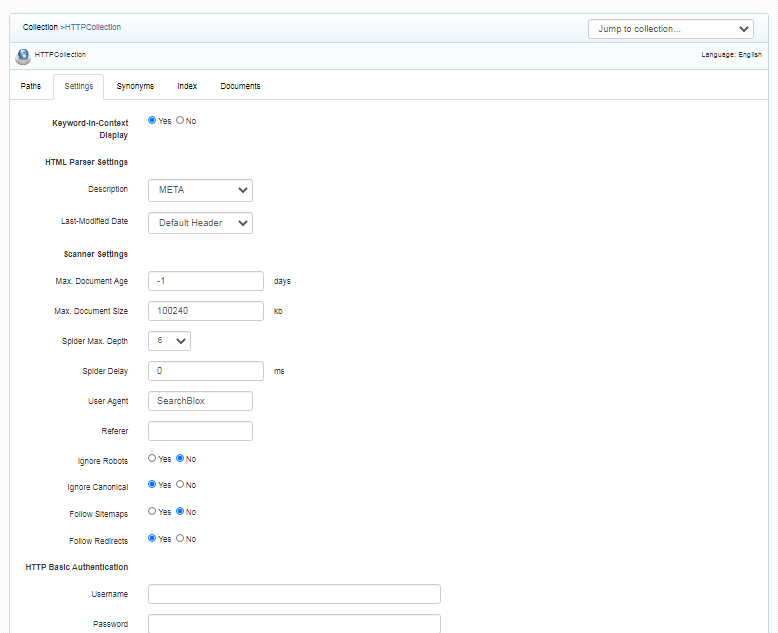

HTTP Collection Settings

The Settings page has configurable parameters for the crawler. SearchBlox provides default parameters when a new collection is created. Most crawler settings can be changed for your specific requirements.

| Section | Setting | Description |
|---|---|---|
| Keyword-in-Context Search Settings | Keyword-in-Context Display | Returns search results with the description taken from the content areas where the search term occurs. |
| HTML Parser Settings | Description | Configures the HTML parser to read the description for a document from one of the HTML tags: H1, H2, H3, H4, H5, H6. |
| HTML Parser Settings | Last-Modified Date | Determines where the last-modified date of an indexed document is read from. By default it is taken from the webpage header. Select the Meta option to read it from meta tags, or the custom date option to use a custom date set on the web server for SearchBlox. |
| Scanner Settings | Maximum Document Age | Specifies the maximum allowable age, in days, of a document in the collection. |
| Scanner Settings | Maximum Document Size | Specifies the maximum allowable size, in kilobytes, of a document in the collection. |
| Scanner Settings | Maximum Spider Depth | Specifies the maximum depth to which the spider is allowed to follow links when indexing documents. The maximum spider depth allowed in SearchBlox is 15. |
| Scanner Settings | Spider Delay | Specifies the wait time, in milliseconds, for the spider between HTTP requests to a web server. |
| Scanner Settings | User Agent | The name under which the spider requests documents from a web server. |
| Scanner Settings | Referrer | A URL value set in the request headers to specify where the user agent previously visited. |
| Scanner Settings | Ignore Robots | Set to Yes to have the spider ignore robots rules, or No to have it obey them. The default value is No. |
| Scanner Settings | Ignore Canonical | Set to Yes or No to tell the spider whether to ignore canonical URLs specified in the page. The default value is Yes. |
| Scanner Settings | Follow Sitemaps | Set to Yes to index only the URLs listed in sitemaps, or No to index all URLs. The default value is No. |
| Scanner Settings | Follow Redirects | Set to Yes or No to tell the spider whether to follow redirects automatically. |
| Relevance | Boosting | Boost search terms for the collection by setting a value greater than 1 (maximum value 9999). |
| Relevance | Remove Duplicates | When enabled, prevents indexing duplicate documents. |
| Relevance | Stemming | When stemming is enabled, inflected words are reduced to their root form. For example, "running", "runs", and "ran" are inflected forms of "run". |
| Relevance | Spelling Suggestions | Spelling suggestions are based on the words found within the search index. When Spelling Suggestions is enabled in the collection settings, suggestions appear for the search box in both regular and faceted search. |
| Relevance | Enable Logging | Provides detailed indexer activity in ../searchblox/logs/index.log. When logging or debug logging is enabled, index.log records: the list of links that are crawled; the processing done on each URL, with a timestamp for when processing starts, whether the URL is being indexed or skipped, and whether it was indexed (each as a separate entry); the timestamp of when indexing completed and the time taken, alongside the indexed URL entry; the last modified date of the URL; whether the URL was skipped, and why. |
| HTTP Basic Authentication | Basic Authentication credentials | When the spider requests a document, it presents these values (user/password) to the HTTP server in the Authorization HTTP header. The attributes required for basic authentication are Username and Password. |
| Form Authentication | Form authentication fields | When access to documents is protected using form-based authentication, the spider can automatically log in and access the documents. The attributes required for form authentication are: Form URL, Form Action, and Name and Value pairs as required. |
| Proxy server Indexing | Proxy server credentials | When HTTP content is accessed through proxy servers, the proxy server settings are required for the spider to access and index content. The attributes required for proxy server indexing are: Proxy server URL, Username, and Password. |
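
The HTTP Basic Authentication row above says the spider presents the username and password in the Authorization header. As a minimal sketch of what that header contains under the standard HTTP Basic scheme (this is generic HTTP behaviour, not SearchBlox's own code; `basic_auth_header` is a hypothetical helper name):

```python
import base64


def basic_auth_header(username, password):
    """Build the Authorization header used by HTTP Basic Authentication."""
    # The credentials are joined with a colon and Base64-encoded.
    # Base64 is an encoding, not encryption.
    credentials = f"{username}:{password}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    return {"Authorization": f"Basic {token}"}
```

Because the encoded value can be decoded trivially, Basic Authentication is normally used over HTTPS.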

Metatags Customization

The HTTP Collection crawler and parser can be controlled using the following HTML markup tags.

| HTML Tags | Description |
|---|---|
| Robots Meta Tag | These tags in the HTML page specify whether SearchBlox can or cannot index the page, and can or cannot spider the entire website. The robots meta tag variants are: `<meta name="robots" content="index, follow">`, `<meta name="robots" content="noindex, follow">`, `<meta name="robots" content="index, nofollow">`, `<meta name="robots" content="noindex, nofollow">`. |
| NoIndex/StopIndex Tags | With HTTP collections, there is often a requirement to exclude sections of an HTML page, such as headers, footers, and navigation, from being indexed. SearchBlox provides three ways to achieve this: `<noindex>Content to Exclude</noindex>`, `<!--stopindex-->Content to Exclude<!--startindex-->`, `<!--googleoff: all-->Content to Exclude<!--googleon: all-->`. |
| Canonical Tag | The canonical URL is specified in a link element to set the preferred URL for HTML pages that are copies or duplicates. For example: `<link rel="canonical" href="http://sample.com"/>`. Note: the canonical tag is ignored by default; to have it honored, set Ignore Canonical to No in the collection settings. |
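
The tags in the table can be sketched with the Python standard library. This is an illustration of the markup conventions, not SearchBlox's parser: `robots_policy` and `strip_excluded` are hypothetical names, and the regular-expression approach to the exclusion markers is an assumption that ignores nesting and malformed markup.

```python
import re
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives from <meta name="robots" content="...">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def robots_policy(html):
    """Return (may_index, may_follow); both are True when no tag is present."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return ("noindex" not in parser.directives, "nofollow" not in parser.directives)


# The three exclusion styles listed in the table above.
EXCLUDED = re.compile(
    r"<noindex>.*?</noindex>"
    r"|<!--\s*stopindex\s*-->.*?<!--\s*startindex\s*-->"
    r"|<!--\s*googleoff:\s*all\s*-->.*?<!--\s*googleon:\s*all\s*-->",
    re.IGNORECASE | re.DOTALL,
)


def strip_excluded(html):
    """Remove the regions marked as not to be indexed."""
    return EXCLUDED.sub("", html)
```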

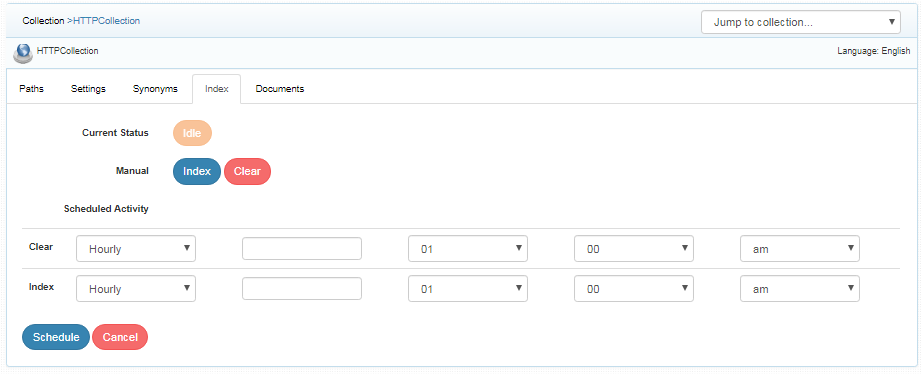

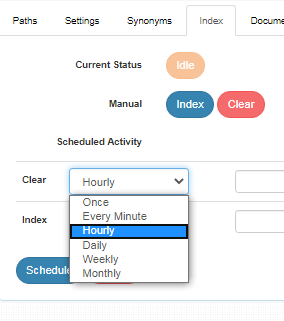

Index and Other Operations

An HTTP Collection can be indexed or cleared on-demand, on a schedule, or through API requests.

| Activity | Description |
|---|---|
| Index | Starts the indexer for the selected collection. Indexing starts from the root URLs. |
| Clear | Clears the current index for the selected collection. |
| Scheduled Activity | For each collection, either of the following scheduled indexer activities can be set. Index: set the frequency and the start date/time for indexing a collection. Clear: set the frequency and the start date/time for clearing a collection. |

- Indexer activity is controlled from the Index sub-tab in the collection.

- The current status of an indexer for a particular collection is indicated in the Index sub-tab.

- The Index operation starts the indexer for the selected collection, beginning from the root URLs.

- On reindexing (that is, clicking Index again after the initial index operation), all crawled documents are reindexed. Documents that have been deleted from the source website or directory since the first index operation are deleted from the index, and new documents are indexed.

- Indexing can also be controlled through the API. The current status of a collection is always indicated on the Collection Dashboard and the Index page.

- An index operation can also be initiated from the Collection Dashboard.

- Scheduling can be performed only from the Index sub-tab or through the API.
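
The reindexing behaviour described above (reindex what is still found, delete what has disappeared, add what is new) can be pictured as a comparison of two URL sets. The sketch below is only a way to reason about the outcome; `plan_reindex` is a hypothetical name, and SearchBlox's internal bookkeeping is not documented here.

```python
def plan_reindex(indexed_urls, crawled_urls):
    """Compare the existing index with a fresh crawl and classify each URL."""
    indexed = set(indexed_urls)
    crawled = set(crawled_urls)
    return {
        # Found again by the crawler: reindexed.
        "reindex": sorted(indexed & crawled),
        # No longer present at the source: deleted from the index.
        "delete": sorted(indexed - crawled),
        # Not seen before: indexed for the first time.
        "add": sorted(crawled - indexed),
    }
```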

Schedule Frequency

SearchBlox supports the following schedule frequencies:

- Once

- Every Minute

- Hourly

- Daily

- Every 48 Hours

- Every 96 Hours

- Weekly

- Monthly
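
To make the frequencies concrete, here is a minimal sketch that computes the next run time from the previous one. It is an assumption-laden illustration: the mapping, the `next_run` function name, and the treatment of Monthly as "same day next month, clamped to the month's last day" are not taken from SearchBlox.

```python
import calendar
from datetime import datetime, timedelta

# Frequencies with a fixed interval. "Once" and "Monthly" are handled separately.
FIXED_INTERVALS = {
    "Every Minute": timedelta(minutes=1),
    "Hourly": timedelta(hours=1),
    "Daily": timedelta(days=1),
    "Every 48 Hours": timedelta(hours=48),
    "Every 96 Hours": timedelta(hours=96),
    "Weekly": timedelta(weeks=1),
}


def next_run(last_run, frequency):
    """Return the next scheduled time, or None when nothing is scheduled."""
    if frequency == "Once":
        return None  # A one-off run is not repeated.
    if frequency == "Monthly":
        # Assumed rule: same day of the following month, clamped to its length.
        year = last_run.year + last_run.month // 12
        month = last_run.month % 12 + 1
        day = min(last_run.day, calendar.monthrange(year, month)[1])
        return last_run.replace(year=year, month=month, day=day)
    return last_run + FIXED_INTERVALS[frequency]
```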

Best Practices for Scheduling Index of Collections

- Do not schedule index and clear operations for the same time.

- If you have multiple collections, stagger the schedules so that no more than 2-3 collections are indexing at the same time.
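
One way to plan staggered start times is to place collections into time slots of a fixed length, with a cap on how many share a slot. The sketch below assumes each indexing run finishes within one slot; the function name, the one-hour slot, and the cap of 2 are hypothetical choices, and the resulting times would still be entered manually in the Index sub-tab.

```python
from datetime import datetime, timedelta


def stagger_start_times(collections, first_start, max_concurrent=2,
                        slot=timedelta(hours=1)):
    """Assign a start time to each collection, max_concurrent per slot."""
    return {
        name: first_start + slot * (position // max_concurrent)
        for position, name in enumerate(collections)
    }
```

For five collections starting at 01:00, this puts two at 01:00, two at 02:00, and one at 03:00.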

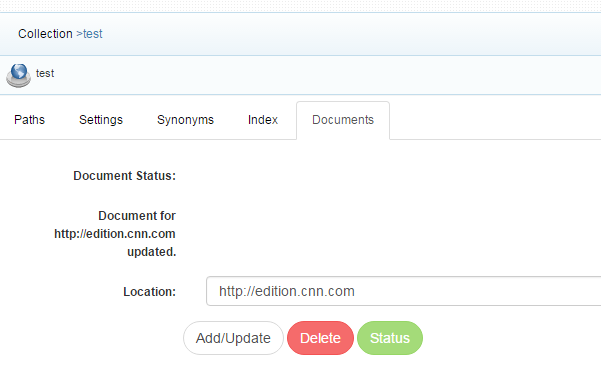

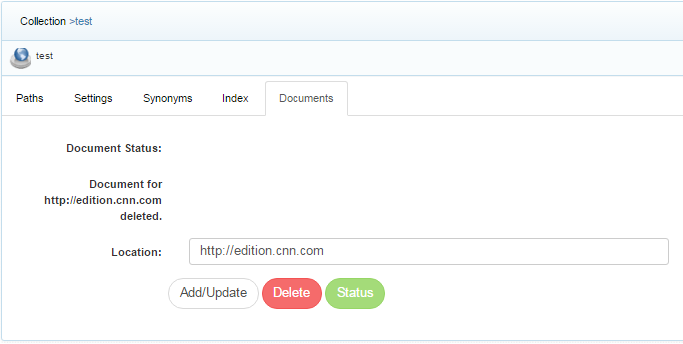

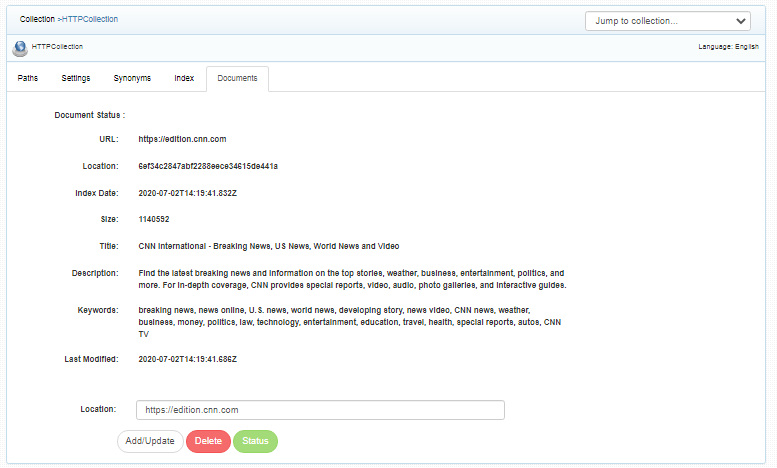

Add Documents

- Using the Documents tab, you can manually add, update, or delete a document/URL through the Admin Console for an HTTP collection.

- To open the Documents tab, click a collection and select the last tab, named Documents.

- Note that this only adds the individual URL and does not start crawling from the URL.

- To add a URL to your collection, enter the web address and click "Add/Update".

- To delete a URL from your collection, enter the web address and click "Delete".

- To see the status of a URL, click "Status".
